Today’s BBBT presentation was by GoodData and I’m still waiting. Vendor after vendor tells us that they’re very special because they’re unique when compared to “traditional BI.” They don’t seem to get that the simple response is “so what?” Traditional BI was created decades ago, when offering software in the Cloud was not reasonable. Now it is. Every young vendor has a Cloud presence and I can’t imagine there’s a “traditional” company that isn’t moving to a Cloud offering. BI is not the Cloud. I want to hear why they have a business model that differentiates them from today’s competitors, not from the ones in the 1990s. I’m still waiting.

Almost all the benefits mentioned were not about their platform; they weren’t even about BI. What was mentioned were the benefits that any application gets by moving to the Cloud. All the scalability, shareability, upgradability and other Cloud benefits do not a unique buying proposition make. Where they will matter is if GoodData has implemented those techniques faster and better in the BI space than its many competitors.

Serial founder Roman Stanek wants his company to provide a strong platform for BI based on Open Source technology. The presentation, however, didn’t make clear whether he really has that. He had the typical NASCAR slide, but only under NDA, with only a single company mentioned as an open reference. His technological vision seems to be good, but it’s too early to say whether or not the major investments he has received will pay off.

What I question is his business model. He and his VP of Marketing, Jeff Morris, mentioned that 2/3 of their revenue comes from OEM agreements, embedding their platform into other applications. However, his focus seems to be on trying to grow the other third, the direct sales to the Fortune 2000. I’m not sure that makes sense.

Another business model issue is that the presenters were convinced that the Cloud means they can provide a single version of the product to all customers. They correctly described the headaches of managing multiple versions of on-premises software (even if they avoided saying “on-premise” only a third of the time). However, the reason those multiple versions exist is that people don’t want to switch from comfortable versions at the speed of the vendor. While the Cloud does allow security and other background fixes to roll out easily to all customers, any reasonable company will have to provide some form of versioning to give customers a range of time to convert to major upgrades.

A couple of weeks ago, 1010data went the other direction, clearly admitting that customers prefer to upgrade at their own pace. I thought they went too far in that direction, with too many versions, but I didn’t mention it in my blog post on that presentation. Combined with GoodData’s thinking that there should only be one, now’s as good a time as any to bring it up. Good Cloud practices will help minimize the number of versions that need to be active for your customers, but it’s not reasonable to think that will mean a single version.

At the beginning of the presentation, Roman mentioned one company as a negative reference: Crystal Reports. At this point, I don’t think that comparison is at all negative. Nothing that GoodData showed us led me to believe that they can really get access to the massively heterogeneous data sources in true enterprise business. He also showed nothing that indicates an ability to provide the top-level analysis and display required in that market. However, providing OEM partners a quick and easy way to add basic BI functions to their products seems to be a great way to build market share and bring in revenue. While Crystal Reports seems archaic, it was the right product with the right business plan at the right time, and the product became the de facto standard for many years.

The presentation left me wondering. There seems to be a sharp team, but there wasn’t enough information to see if vision and product have gelled to create a company that will succeed. The company has been around since 2008 and only just officially released the product, yet it has a number of very interesting customers. That can’t be based just on the strong reputation of Mr. Stanek; there has to be meat there. How much, though, is open to question based on this presentation. If you’re considering an operational data store in the Cloud, talk with them. If you want more, get them to talk to you more than they talked to us.

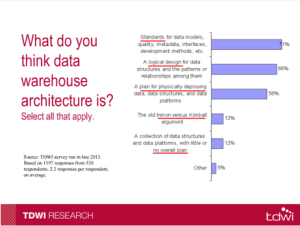

Another key point was made as to the evolving nature of the definition of data warehousing. Twenty years ago, it was about creating the repository for combining and accessing the data. That is now definition number three. The top two responses show a higher-level business process and strategy in place than “just get it!”

Where I have a problem with the presentation is when Mr. Russom stated that analytics are different from reporting. That’s a technical view and not a business one. His talk acknowledged the reality that first we had to get the data and now we can move on to more in-depth analysis, but he still thinks they’re very different. It’s as if there’s a wall between basic “what’s the data” and “finding out new things,” concepts he said don’t overlap.

Let’s look at the current state of BI. A “report” might start with a standard layout of sales by territory. However, the Sales EVP might wish to wander the data, drilling down and slicing & dicing to understand things better by industry within a territory, by cities within it, and by other metrics across territories. That combines what he defines as separate: reporting and data discovery.
