Today, Database Trends and Applications hosted a webinar. While there are important things to talk about, I’ll start with an amusing point: the inverse relationship between company size and presenter title, which shows up in every webinar but was wonderfully on display here. The three presenters were:

- Mark Theissen, CEO, Cirro

- Peter Hoopes, VP/GM, BIRT Analytics Division, Actuate

- Amit Patel, Program Director, Data Warehouse Solutions Marketing, IBM

The topic was “Accelerating your Analytics for Faster Insights.” That is a lot to cover in less than an hour, and the time was made even shorter by splitting it among a tag team of three people from different companies. I must say I was pleasantly surprised by how well they integrated their messages.

Mark Theissen was up first. There were a lot of fancy names for what Cirro does, but think of it as ETL; that’s much easier. Mark’s point is that no single repository can handle all enterprise data, even if putting it all in one place made sense. Cirro’s goal is to provide on-demand distributed analytics, using federation to link multiple data sources in order to help businesses analyze more complete information. It’s a strong point that people have forgotten over the last few years, as the typical “the latest craze will solve everything” focus on Hadoop has minimized the importance of reaching multiple sources.

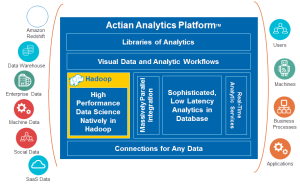

Peter Hoopes followed to talk about doing the analytics. One phrase he used should be discussed in more detail: “speed wins.” So many people are focused on the admittedly important area of immediate retail feedback on the web and with mobile devices. There, yes, speed can win. However, not always. Sometimes thought helps too. That’s one reason why complex analysis for high-level business strategy and planning is different from putting an ad on a phone as you walk by a store. There are clear reasons for speed, even in analytics, but it should not be the only focus in a BI decision.

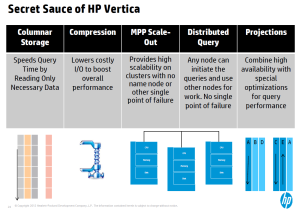

IBM’s Amit Patel then came on to discuss the meat of the matter: DB2 BLU. This is IBM’s foray into in-memory, columnar databases. It’s a critical addition to the product line. There are advantages to in-memory that have created a need for all major players to have an offering, and IBM does the “me too!” well; but how does IBM differentiate itself?

As someone who understands the need for integration of transaction and analytic systems and agrees both need to co-exist, I was intrigued by what Amit had to say. Transactions go into the normal DB2 environment while being shadowed into the columnar BLU environment to speed analytics. Think about it: transactions can still be managed with the row-oriented technologies best suited for them while the information is, in parallel, moved to the analytics database that happens to be in memory. It seems to be a good way to begin to blend the technologies and let each do what works best.
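For readers who like to see the idea in code, here is a toy sketch of the row-plus-columnar-shadow pattern described above. It is purely illustrative and is not how DB2 or BLU is implemented: the `ShadowedStore` class, its method names, and the sample schema are all hypothetical, and the shadow copy is updated synchronously on insert rather than in parallel, to keep the example small.

```python
class ShadowedStore:
    """Toy model: a row store for transactions plus a columnar shadow for analytics.

    Hypothetical illustration only; names and behavior are assumptions,
    not a description of any IBM product.
    """

    def __init__(self, schema):
        self.schema = tuple(schema)                      # fixed list of column names
        self.rows = []                                   # row-oriented: one dict per transaction
        self.columns = {name: [] for name in self.schema}  # column-oriented shadow copy

    def insert(self, row):
        # Transactional path: keep the whole record together.
        self.rows.append({name: row[name] for name in self.schema})
        # Shadow path: append each value to its own column list.
        for name in self.schema:
            self.columns[name].append(row[name])

    def get_row(self, index):
        # Row lookups read one complete record.
        return self.rows[index]

    def column_sum(self, name):
        # Analytic scans touch only the one column they need.
        return sum(self.columns[name])


store = ShadowedStore(("order_id", "region", "amount"))
store.insert({"order_id": 1, "region": "east", "amount": 120.0})
store.insert({"order_id": 2, "region": "west", "amount": 80.0})
store.insert({"order_id": 3, "region": "east", "amount": 50.0})

second_order = store.get_row(1)            # transactional access by record
total_amount = store.column_sum("amount")  # analytic access by column
```

The point of the split is visible even at this scale: fetching an order reads a single record, while totaling `amount` never looks at `order_id` or `region`.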

For a slightly techie comment, I did like what Mr. Patel was saying about IBM’s management of memory and CPU. After all, while IBM is one of the largest software vendors in the world, too many folks forget its hardware background. One quick mention in a sentence about “hardware vendors such as Intel and IBM…” was a great touch to add a message that can help IBM differentiate its knowledge of MPP from that of pure software companies. As a marketing guy, I smiled big time at the smooth way that was brought up.

Summary

The three presenters did a good job of pointing out that the heterogeneous nature of enterprise data isn’t going away; rather, it’s expanding. Each company, in its own way, put forward how it helps address that complexity. Still, it takes three companies.

As the BI market continues to mature, the companies that manage to combine the enterprise information supply chain components most smoothly will succeed. Right now, the message is being presented jointly by three players. Other competitors also partner for ETL, data storage and analytics. It sounds interesting, but the market’s still young. Look for more robust messages from single vendors to evolve.