Data Lakes, the renamed ODS, aren’t the only solution for accessing data. Think actual need, understand supporting metadata, then build your data ingestion plan. Read my latest TechTarget column.

Webinar Review: Oracle Big Data Cloud, Understanding Business

People at technology startups love to call the industry giants dinosaurs. The analogy fails for a number of reasons. The funniest is that the dinosaurs existed for many millions of years. Since the large companies exist now, are the startups saying the big companies will only disappear if we’re hit by a meteor? Companies became large by filling a need. While many might not be as nimble, their experience, especially in enterprise software, means they often see the needs of the business community while the small companies are focused too much on their “cool” technology.

This week’s Oracle webinar, hosted by the DBTA, was a good example of that. The speakers were Rich Clayton, VP Business Analytics Product Group, and Omri Traub, VP Software Development, and the subject was, no surprise, Oracle Big Data Cloud Service (OBDC. Yeah, I know. Too close to ODBC…). Before we get into the details, people need to be aware that Oracle is fully committed to the cloud, as pointed out in a recent advertorial in Forbes. Oracle is clearly competing with Amazon for enterprise cloud business. Big data is only one part of that.

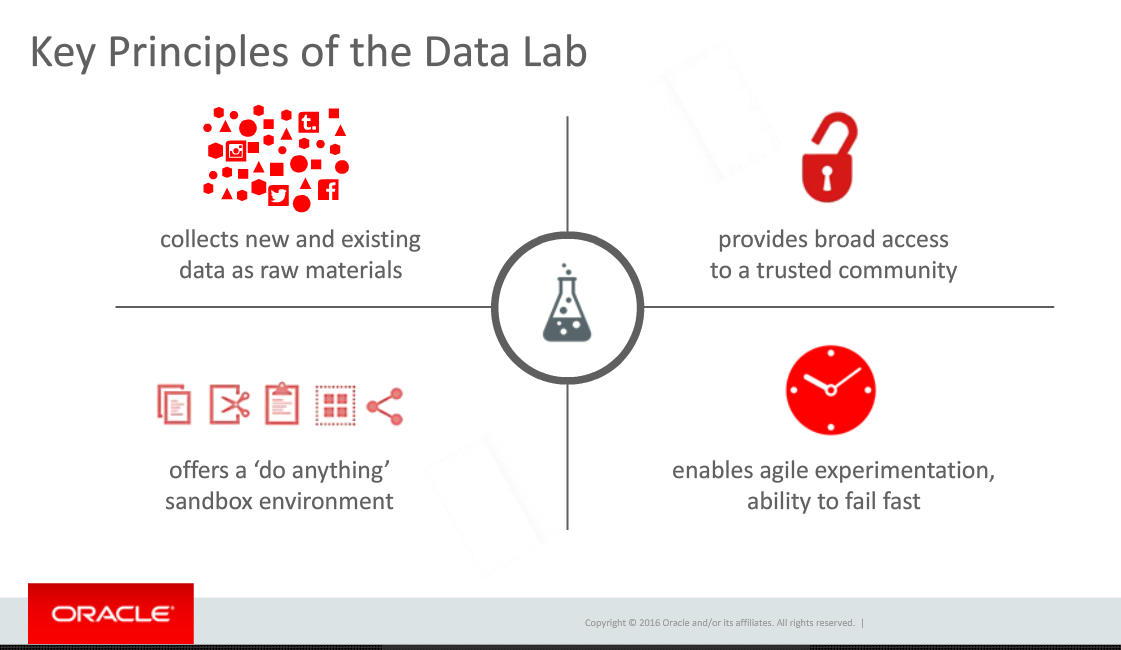

Rich Clayton began the presentation by pointing towards Thomas Edison’s laboratory as an example of using the ideas from many people to not only invent things but also to figure out how to market those inventions. He brought that directly into the evolution of corporate data labs. The biggest problem, Rich stated, is that labs are usually only populated by very technical people while they require a broader array of talents. That requirement is one of the data lab principles he defined and one I’ve also described as the missing component of many corporate data labs.

A related problem is that most products are so complex and silo’d that very technical people are needed. At this stage in business intelligence and big data, that’s the horse that needs to be addressed before the broad access cart can move.

Omri Traub then took over for the demonstration portion of the presentation. Unfortunately, he unintentionally proved the point about technical folks missing business needs by the setup he used for the demonstration. The demo was built around an enormous amount of New York City taxi data. While manipulating a billion-record data set is cool and powerful, he never presented a business message. He pointed to the large volume of data, talked about other data sources he combined, and then played with the data to show correlations.

The problem? Omri claimed we were gaining insight. Correlations aren’t insight. Understanding how those correlations might impact your business, and having ideas on how to adapt the business to what you find, is insight. Nothing in the demonstration pointed towards insight.

Fortunately, Rich Clayton earlier had given a couple of case studies showing business insight gained by OBDC early customers. It would have been much better if Mr. Traub had focused on one of those cases or something similar.

The best point of the demonstration was when Omri showed how, in the middle of playing with some relationships, he easily incorporated some analysis created by a different person. As mentioned above, collaboration is critical and it looks like Oracle hasn’t limited that to just a marketing message but has worked to make sure that Oracle’s product helps the team. As many companies claim to do that and it was only an overview, your mileage might vary. Make sure when you talk to them to follow through and see whether the collaboration (not to mention the entire product…) meets your needs.

The final section was the Q&A. I’m a marketing person, so I have to be honest and state that it sounded like canned questions they wanted to address, as there was way too much about the full Oracle ecosystem brought into discussion at this point compared to what I’d expect from customers. Still, there was one important point.

A question was asked about what advanced analytics might be added. Mr. Traub had the perfect response. After quickly mentioning that, yes, Oracle was always looking at advanced analytics and how to add them, he made a much more important point. Collaboration is key and OBDC is designed to get business people involved. All analytics need to be added in a usable manner, in a way that is understandable and can be leveraged by more people than just the technical resources.

That is the critical viewpoint that a large, enterprise focused company can bring to BI, the cloud and big data. That’s why it’s foolish to write off the large companies, the ones with expertise in not just technology, but in business and business relationships. They might not move as fast, but they can move to the right places with the right products and the right business messages.

BI in the Cloud: Article on TechTarget

My latest article can be found here: http://searchbusinessanalytics.techtarget.com/feature/Business-intelligence-in-the-cloud-gives-boost-to-BI-process.

Looker at the BBBT: A New Look at SQL Performance

The most recent BBBT presentation was by Looker. Lloyd Tabb, Founder & CTO, and Zach Taylor, Product Marketing Manager, showed up to display yet another young company’s interesting technology.

Looker’s technology is an application server that sits above relational databases to provide faster, more complex queries. They’ve developed their own language, LookML, to help with that. That’s no surprise, as Lloyd is a self-described language guy.

It’s also no surprise that the demos, driven by both Lloyd and Zach, were very coding heavy. Part of the reason that very technical focus exists is, as Mr. Tabb stated, that Looker thinks there are two groups of users: coders who build models and business managers who use the information. There is no room in that model for the business analyst, the person who understands how to communicate a complex business need to the coders and how to help the coders deliver something that is accessible to and understandable by the information consumer.

The bifurcation played out in the demonstration through an almost exclusive focus on code, code and more code, with a brief display of some visualization technology. The former was very good while the latter wasn’t bad but, in keeping with their mainly technical focus, had complex visualizations without good enough legends. They were visualizations that would be understood by technical people but need to be better explained for the business audience they claim to address.

As an early stage company, that’s ok. The business intelligence (BI) market is still young and very fragmented. You can get different groups in large companies using different BI tools. While Looker talks about 300 customers, as with most companies of their size those could be only such small groups. If they’re going to grow past those groups, they need to focus a bit more on how to better bridge technology and business.

They also have a good start in attracting the larger market because they support both cloud and on-premises systems. The former market is growing while the latter isn’t going away. Providing the ability for their server to run in either place will address the needs of companies on either side of the divide.

RDBMS ≠ SQL

One key to their system is they don’t move data. It stays resident on the source systems. Those could be operational systems, data warehouses, an ODS or whatever. What they must have is SQL. When asked about Hadoop and other schema-on-read systems, the Looker team stated they are an RDBMS-based application but they’ll work on anything with SQL access. I have no problem with the technology, but they need to be very clear about the split.
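
To make the "don't move data, just require SQL" idea concrete, here is a minimal sketch in Python. It is not LookML and not Looker's implementation. The model dictionary, the `build_sql` and `run_on_source` helpers, and the `orders` table are all hypothetical, and an in-memory SQLite database stands in for a source system. The point is only that a modeling layer can compile a definition into SQL, push it to wherever the data lives, and bring back nothing but the results.

```python
import sqlite3

# Hypothetical model definition: NOT LookML, just a dict standing in for one.
ORDERS_MODEL = {
    "table": "orders",
    "dimensions": {"region": "region"},
    "measures": {"total_revenue": "SUM(amount)", "order_count": "COUNT(*)"},
}


def build_sql(model, dimension, measures):
    """Compile one dimension plus a list of measures into a GROUP BY query."""
    dim_expr = model["dimensions"][dimension]
    select_parts = [f"{dim_expr} AS {dimension}"]
    select_parts += [f"{model['measures'][m]} AS {m}" for m in measures]
    return (
        f"SELECT {', '.join(select_parts)} "
        f"FROM {model['table']} "
        f"GROUP BY {dim_expr} "
        f"ORDER BY {dimension}"
    )


def run_on_source(conn, sql):
    """Run the generated SQL on the source connection; only results come back."""
    return conn.execute(sql).fetchall()


# Stand-in for a source system (in-memory SQLite, purely for the sketch).
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (region TEXT, amount REAL)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("east", 100.0), ("east", 50.0), ("west", 75.0)],
)

sql = build_sql(ORDERS_MODEL, "region", ["total_revenue", "order_count"])
rows = run_on_source(source, sql)
print(sql)
print(rows)
```

Because the only contract with the source is a SQL string, the same sketch would apply to any engine that accepts SQL, relational or not, which is the split the message needs to make clear.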

SQL came from the relational world, but as they pointed out in an aside, it isn’t limited to that. They should drop the RDBMS message and focus on SQL. As Lloyd Tabb said, “SQL is the right abstraction.” What I don’t know is whether he understands, given his technology focus and the biases that come with it, that SQL isn’t the right abstraction because of some technical advantage but because it’s the major player. McDonald’s isn’t the best burger because it has the most stores. SQL might not be the best access method, but it’s the one business knows and so it’s the one the newer database companies and structures can’t ignore.

Last year, the BBBT heard from multiple companies including Actian and EXASOL, companies focused on providing SQL access to Hadoop. That’s as important as what Looker is doing. The company that manages to do both well will jump ahead of the pack.

Summary

Looker is a good, young company with some technical advantages that can greatly improve the performance of SQL queries to business databases, and it provides a basic BI front end to display the results. I’m not sure they have the resources to focus on both, and I think the former has the clearest advantage in the marketplace. Unless they have more funding and a strong management team that can begin to better understand the business side of the market, they will have problems addressing the visualization side of BI. They need to keep improving their engine, spread it to access more data sources, and partner with visualization companies to provide the front end.

IBM and the Cloud? Don’t write it off

At today’s investor meeting, IBM execs announced a target of $40 billion in revenue for cloud, analytics, mobile, social and security software by 2018. I expect to see folks talk about dinosaurs not being able to turn fast enough and predict failure to meet that goal. I don’t know if they can do it, but to make such ardent predictions you’d have to ignore history.

Mid-sized Unix servers came along and folks talked about IBM going away.

IBM blew a chance to own the PC industry and the same predictions followed them.

Linux? Freeware was going to destroy the mainframe. Oops, Linux partitions run on mainframes.

Now we’ve seen the large growth of the cloud. Much of it has been on commodity boxes. However, as data gets larger, analytics get more powerful and networks become more robust, there’s clearly space for a company with such a strong history in hardware, services and adapting to changes.

After all, too many people still think of IBM as a hardware company. While it’s too early for the 2014 report, you can look at page 7 of the 2013 Annual Report. Look at what a tiny percentage of the bar is hardware. Software and services are fairly even in splitting the vast majority of the revenue stream.

It’s a strong goal and will take a lot of pushing. How many politely phrased “re-orgs” will happen to lay off staff? Who knows? Will they succeed? No clue. All I expect is that they’ll continue to grow and nobody should count them out.

DataHero at the BBBT: A Startup Getting It Right

First, on a tangent not directly focused on the product: Thank you Chris Neumann, CEO of DataHero. After hearing presenters from multiple companies consistently use the wrong words over the last few months, you used both premise and premises in the appropriate places. Thanks!

As you might gather, Wednesday’s presentation at the BBBT was by DataHero. A fairly young company, less than three years old, DataHero is focused on “Delivering a self-service Cloud BI solution that enables enterprise and SMB users to analyze and visualize their SAAS-based data without IT.”

Self-service BI is what almost all the players, both new and mature companies, are trying to provide these days. This just means they’re another player in attempting to help business knowledge workers to connect to data, analyze it and gather useful and actionable information without heavy intervention by business analysts and IT.

Cloud is also where everyone’s moving since it has so many advantages to all areas of software. DataHero, as a small company, isn’t just in the Cloud. They’ve smartly decided to begin by focusing on public Cloud applications with accessible APIs.

While that initially simplifies things, the necessity to handle complexity still exists in that world. Mike Ferguson, another BBBT member analyst, pointed out that many of his clients have multiple, customized Salesforce.com instances and that’s bringing the upgrade issues seen in on-premises systems into the Cloud world. Chris acknowledges that and understands the need to grow to handle the issue, but knows that at the current size of DataHero there’s enough of a market for an initially more focused solution.

A strategic issue comes up with the basic nature of the Cloud. Mr. Neumann mentioned Cloud being opposed to centralized data, but that’s not quite so. Depending on how Cloud systems are set up, they can help or hinder centralization of data. However, right now he is accurate in that most of the growth of Cloud is departmental in nature. It’s also further blurring the always fuzzy line between enterprise and SMB markets by providing applications that both groups can leverage.

Another area that shows thought in their growth strategy is entry into new markets. Chris is clear that they dip their toes into an arena, check reactions, and if positive then try to partner with as many companies in the space as possible to maintain neutrality. That means they don’t get locked into the first vendor the first client wants to work with, regardless of market control, leaving flexibility for customers. Their partner page, though young, clearly shows that strategy in effect. That’s a good move and I wish more vendors would think that way.

Another key growth issue is data cleansing. Right now, DataHero does none, expecting that the source system provides that capability. However, as clients use more and more source systems, there’s a cleansing need to clarify data clashes from different systems. That’s something the team at DataHero says they’re aware of while, again, that’s future growth (no time frames, as per legal sanity…).

The demo was very interesting. The other founder, Jeff Zabel, has a strong history in designing interfaces for software in vehicles, meaning usability really matters. That can be seen in a very clear and simple interface. It is easy to use. However, as pointed out by many other companies, 80% of business data has a location component, and many of DataHero’s competitors are far ahead of them in the area of geospatial information. That’s a key area they’ll have to improve.

Summary

DataHero is a young company with a young product. The key is that they aren’t just looking at their cool product and customizing solely on first sales. They have thought through a clear growth strategy. The BI tool is clearly fully fledged for the market segment they’ve chosen for initial release and they have thought through their growth strategy in far more detail than I’ve seen in other vendors who have presented at the BBBT.

If they execute their vision, and I see no reason why they wouldn’t, the folks at DataHero have a bright future.

NuoDB at the BBBT: Another One Bringing SQL to the Cloud

Today’s presentation in front of the BBBT was by NuoDB’s CTO, Seth Proctor. NuoDB is a small company with big investments. What makes them so interesting? It’s the same thing as in many of the other platform presenters at the BBBT. How do we get real databases in the Cloud?

Hadoop is an interesting experiment and has clearly brought value to the understanding of massive amounts of unstructured data. The main value, though, remains that it’s cheap. The lack of SQL means it’s ok for point solutions that don’t stress its performance limitations. Bringing enterprise database support to the cloud is something else.

The main limitation is that Hadoop and other unstructured databases aren’t able to handle transactional systems while those still remain the major driver in operating businesses.

NuoDB has redesigned the database from the ground up to be able to run distributed across the internet. They’ve created a peer-to-peer structure of processes, with separate processes to manage the database and SQL front end transaction issues.

Seth pointed out that they “Have done nothing new, just things we know put together in a new way.” He also pointed out they have patents. My gripe about patents for software is an issue for another day, but that dichotomous pairing points to one reason (Apple’s patent on a rounded rectangle is another example of the broken patent system, but off the soap box and onwards…).

It’s clear that old line RDBMS systems were designed for major, on-premises servers. The need for a distributed system is clear and NuoDB is at the forefront of creating that. One intriguing potential strength, one there wasn’t time to discuss in the presentation, is a statement about the object-oriented structure needed for truly distributed applications.

Mr. Proctor stated that the database schema is in object definitions, not hard coded into the database. He added that provides more flexibility on the fly. What it also could mean is that the schema isn’t restricted to purely RDBMS schemas and that future versions of their database could support columnar and even unstructured database support. For now, however, the basic ability to change even a standard row-based relational database on the fly without major impacts on performance or existing applications is a strong benefit.

As the company is young and focused on the distributed aspects of performance, it was also admitted that their system isn’t one for big data, even structured data. They’re not ready for terabytes, not to mention petabytes, of data.

The Business

That’s the techie side, but what about business?

The company is focused on providing support for distributed operational systems. As such, Seth made clear they haven’t looked at implementations supporting both operational and analytical systems. That means BI is not a focus and so the product might not be the right system for providing high level business insight.

In addition, while I asked about markets I mainly got an answer about Web sites. They seem to think the major market isn’t Global 1000 businesses looking to link distributed operational systems but that Web commerce sites are their sweet spot. One example referred to a few times was in transactional systems for businesses selling across a country or around the world. If that’s the focus, it’s one that needs to be made more explicit on their web site, which really doesn’t discuss markets in the least.

It’s also an entry into the larger financial markets space. It and medical have always been two key verticals for new database technologies due to the volumes of information. That also means they need to prioritize the admitted lack of large database support or they’ll hit walls above the SMB market.

The one business thing that bothers me is their pricing model. It’s based on the number of hosts. As the product is based on processes, there’s no set number of processes per host. In addition, they mentioned shared hosting, places such as AWS, where hosts may be shared by several of NuoDB’s customers or where load balancing might take your processes and have them on one host one day and multiple hosts the next.

Host-based pricing seems to be a remnant of on-premises database systems that Cloud vendors claim to be leaving. In a distributed, internet-based setup, who cares how big the host is, where the host is, or anything else about the host? The work the customer cares about is done by the processes, the objects containing the knowledge and expertise of NuoDB, not the servers owned by the hosting firm. I would expect that Cloud companies would move from processors to process.
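
A quick back-of-the-envelope sketch shows why that bothers me. Every number and name here is made up for illustration: the price, the host names and the process names are hypothetical and are not NuoDB’s actual pricing. The same four processes do the same work on both days, and only the load balancer’s placement changes.

```python
# All numbers and names below are hypothetical, for illustration only.
PRICE_PER_HOST = 100  # made-up price per host per billing period


def billed_hosts(placement):
    """Count the distinct hosts that carry at least one process."""
    return len(set(placement.values()))


def host_based_cost(placement, price_per_host=PRICE_PER_HOST):
    """Cost under a per-host model: distinct hosts times the host price."""
    return billed_hosts(placement) * price_per_host


# Same four processes, same workload, two placements chosen by a load balancer.
day_one = {"p1": "host-a", "p2": "host-a", "p3": "host-a", "p4": "host-a"}
day_two = {"p1": "host-a", "p2": "host-b", "p3": "host-c", "p4": "host-d"}

cost_day_one = host_based_cost(day_one)
cost_day_two = host_based_cost(day_two)
process_count = len(day_one)  # unchanged between the two days

print(cost_day_one, cost_day_two, process_count)
```

Under these assumed numbers the bill quadruples while the process count, the thing doing the customer’s work, never changes. A per-process price would be identical on both days.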

Summary

NuoDB is a company focused on reinventing the SQL database for the Cloud. They have significant investment from the VC and business markets. However, it would be foolish to think that Oracle, IBM and other existing mainstream RDBMS vendors aren’t working on the same thing. What NuoDB described to the BBBT used most of the right words from the technology front and they’re ramping up their development based on the investments, but it’s too early to say if they understand their own products and markets enough to build a presence for the long term.

They have what looks like very interesting technology but, as I keep repeating in review after review, we know that’s not enough.