Data Lakes, the renamed ODS, aren’t the only solution for accessing data. Think actual need, understand supporting metadata, then build your data ingestion plan. Read my latest TechTarget column.

Diyotta: Data integration for the enterprise

I’m still catching up and reviewed a video of last month’s Diyotta presentation to the BBBT. The company is another young data integration company, founded in 2011, working to take advantage of current technologies to provide not just better data integration but also better change management of modern data infrastructures. In many ways, they’re similar to another company, WhereScape, which I discussed last year. Both are young and small, while the market is large and the need is great.

The presentation was given by Sanjay Vyas, CEO, and John Santaferraro, CMO. The introduction by Sanjay was one of the best from a small company founder that I’ve seen in a long time. He gave a brief overview of the company, its size and its global structure (with HQ in Charlotte, NC, and two offshore development centers). Then he went straight to what most small companies leave for last: He presented a case study.

My biggest B2B marketing point is that you need to let the market know you understand it. Far too many technical founders spend their time talking about the technology they built to solve a business problem, not the business problem that was addressed by technology. Mr. Vyas went to the heart of the matter. He showed the pain in a company, the solution and, most importantly, the benefits. That is what succeeds in business.

It also wasn’t an anonymous reference: it was Scotiabank, a leading Canadian bank with a global presence. When a company that large gives a named reference to a startup as small as Diyotta, you know the firm is happy.

John Santaferraro then took over for a bit with mostly positive impact. While he began by claiming a young product was mature because it’s version 3.5, no four-year-old firm still working on angel investments has a fully mature product. From the case study and what was demo’d later, it’s a great product but it’s clear it’s still early and needs work. There’s no need to oversell.

The three main markets John said Diyotta aims at are:

- Big data analytics.

- Data warehouse modernization.

- Hybrid data integration including cloud and on-premises (though John was another marketing speaker who didn’t want to use the “s” at the end).

While the other two are important, I think it’s the middle one that’s the sweet spot. They focus on metadata to abstract business knowledge of sources and targets. While many IT organizations are experimenting with Hadoop and big data, getting a better understanding and improved control over the entire EDW and data infrastructure as big data is added and new mainline techniques arrive is where a lot more immediate pain exists.

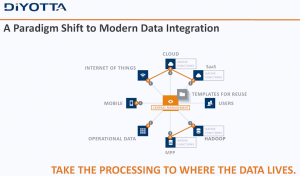

Another marketing miss that could have incorporated that key point was when Mr. Santaferraro said that the old ETL methods no longer work because “having a server in the middle of it … doesn’t exist anymore.” The very next slide was as follows.

Diyotta still seems to have a server in the middle, managing the communications between sources and targets through metadata abstraction. The little “A’s” in the data extremities are agents Diyotta uses to preprocess requests locally to optimize what can be optimized natively, but they’re still managed by a central system.

The message would be more powerful by explaining that the central server is mediating between sources and targets, using metadata, machine learning and other modern tools, to appropriately allocate processing at source, in the engine or in the target in the most optimal way.

While there’s power in the agents, that technology has been used in other aspects of software with mixed results. One concern is that it means a high need for very close partnerships with the systems in which the agents reside. While nobody attending the live presentation asked about that, it’s a risk. The reason Sanjay and John kept talking about Netezza, Oracle and Teradata is because those are the firms for whose products Diyotta has created agents. Yes, open systems such as Hadoop and Spark are also covered, but agents do limit a small company’s ability to address a variety of enterprises. The company is still small, so as long as they focus on firms with similar setups to Scotiabank, they have time to grow, to add more agents and widen their access to sources; but it’s something that should be watched.

On the pricing front, they use pricing purely based on the hub. There’s no per user or per connector pricing. As someone who worked for companies that used pricing that involved connectors, I say bravo! As Mr. Vyas pointed out, their advantage is how they manage sources and targets, not which ones you want them to access. While connecting is necessary, it’s not the value add. The pricing simplifies things and can save money compared with many more complex pricing schemes that charge for parts.

The final business point concerns compliance. An analyst in the room (Sorry, I didn’t catch the name) asked about Sarbanes-Oxley. The answer was that they don’t yet directly address compliance but their metadata will make it easier. For a company that focuses on metadata and whose main reference site is a major financial institution, it would serve their business to add something to explicitly address compliance.

Summary

Diyotta is a young company addressing how enterprises can leverage big data as target and source alongside the existing infrastructure through better metadata management and data access. They are young and have many of the plusses and minuses that involves. They have some great technology but it’s early and they’re still trying to figure out how to address what market.

The one major advantage they have, given what I’ve seen in only a two hour presentation, is Sanjay Vyas. Don’t judge a startup on where they are now or where you think they need to be. Judge them on whether or not management seems capable of getting from point A to point B. Listening to Mr. Vyas, I heard a founder who understands both business and technology and will drive them in the direction they need to go.

IBM, you BM, we all BM for … Spark!

IBM at BBBT

A recent presentation by IBM at the BBBT was interesting. As usual, it was more interesting to me for the business information than the details. As unusual, they did a great job in a balanced presentation covering both. While many presentations lean too heavily in one direction or the other, this one covered both sides very well.

The main presenter was Harriet Fryman, VP of Marketing, IBM Analytics Platform. Adding information during the presentation were Steven Sit, Director of Product Management, Open Source Based Analytics Systems, and Steve Beier, Program Director, Spark Technology Center.

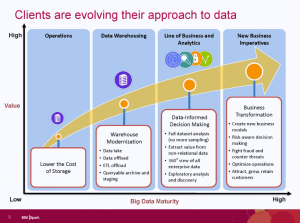

The focus of the talk was IBM’s commitment to Apache Spark. Before diving deep into the support, Ms. Fryman began by talking about business’ evolving data needs. Her key point is that “we all do data hoarding,” that modern technologies are allowing us to hoard far more data than ever before, and that better ways are needed to get value out of the data.

She then proceeded to define three key aspects of the growth in analytics:

- Applying analytics in more parts of business.

- Understanding the time value of data.

- The growth of machine learning and cognitive systems.

The second two overlap, as the ability to analyze large volumes of data in near real-time means a need to have systems do more analysis. The following slide also added to IBM’s picture of the changing focus on higher level information and analytics.

The presentation did go off on a tangent as some analysts overthought the differences in the different IBM groups for analytics and for Watson. Harriet showed great patience in saying they overlap, different people start with different things and internal organizational structures don’t impact IBM’s ability to leverage both.

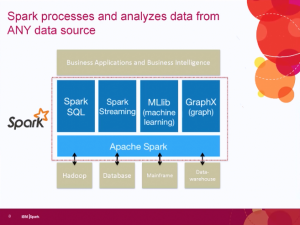

The focus then turned back to Spark, which IBM sees as the unifying layer for data access. One key issue related to that is the Spark v Hadoop debate. Some people seem to think that Spark will replace Hadoop, but the IBM team expressed clear disagreement. Spark is access while Hadoop is one data structure. While Hadoop can allow for direct batch processing of large jobs, using Spark on top of Hadoop allows much more real time processing of the information that Hadoop appropriately contains.

One thing on the slide that wasn’t mentioned but links up with messages from other firms, messages which I’ve supported, is that one key component, in the upper left hand corner of the slide, is Spark SQL. Early Hadoop players were talking about no-SQL, but people are continuing to accept that SQL isn’t going anywhere.

Well, most people. At least fifteen minutes after this slide was presented, an attending analyst asked about why IBM’s description of Spark seemed to be similar to the way they talk about SQL. All three IBM’ers quickly popped up with the clear fact that the same concepts drive both.

While the team continued to discuss Spark as a key business initiative, Claudia Imhoff asked a key question on the minds of anyone who noticed huge IBM going to open source: What’s in it for them? Harriet Fryman responded that IBM sees the future of Spark and to leverage it properly for its own business it needed to be part of the community, hence moving SystemML to open source. Spark may be open source, but the breadth and skills of IBM mean that value added applications can be layered on top of it to continue the revenue stream.

Much more detail was then stated and demonstrated about Spark, but I’ll leave that to the more technical analysts and vendors who can help you.

One final note put here so it didn’t distract from the main message or clutter the summary. Harriet, please. You’re a great expert and a top marketing person. However, when you say “premise” instead of “premises,” as you did multiple times, it distracts greatly from making a clear marketing message about the cloud.

Summary

IBM sees the future of data access to be Apache Spark. Its analytics group is making strides not only to align with open source, but to be an involved player to help the evolution of Spark’s data access. To ignore IBM’s combined strength in understanding enterprise business, software and services is to not understand that it is a major player in some of the key big data changes happening today. The IBM Spark initiative isn’t a marketing ploy, it’s real. The presentation showed a combination of clear business thought and strategy alongside strong technical implementation.

Marketing lesson: How to cram too many vendors into too short a timeframe

I’ll start by being very clear: This is a slam on bad marketing. Do not take this column as a statement that the products have problems, as we didn’t see the products.

Database Trends and Applications magazine/website held a webinar. The first clue there was something wrong is that an hour-long seminar had three sponsors. In a roundtable forum, that could work, and the email mentioned it was a roundtable, but it wasn’t. Three companies, three sequential presentations. No roundtable.

It was titled “The Future of Big Data: Hybrid Architectures and Best-of-Breed”. The presenters were Reiner Kappenberger, Global Product Manager, HP Security Voltage, Emma McGrattan, SVP Engineering, Actian, and Ron Huizenga, ER/Studio Product Manager, Embarcadero. They are three interesting companies, but how would the presentations fit together?

They didn’t.

Each presenter had a few minutes to slam through a pitch, which they did with varying speeds and content. There was nothing tying them into a unified vision or strategy. That they all mentioned big data wasn’t enough and neither was the time allotted to hear significant value from any of them.

I’ll burn through each as the stand-alone presentations they were.

HP Security Voltage

Reiner Kappenberger talked about his company’s acquisition by HP earlier this year and the major renaming from Voltage Security to HP Security Voltage (yes, “major” was used tongue-in-cheek). Humor aside, this is an important acquisition for HP to fill out its portfolio.

Data security is a critical issue. Mr. Kappenberger gave a quick overview of the many levels of security needed, from disk encryption up to authentication management. The main feature focus in Reiner’s allotted time was partial tokenization: being able to encrypt parts of a full data field, for instance disguising the first five digits of a US Social Security number while leaving the last four visible. While he also mentioned tying into Hadoop to track and encrypt data across clusters, time didn’t permit any details. For those using Hadoop for critical data, you need to find out more.
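
To make the idea concrete, here is a toy sketch of partial tokenization: the first five digits of an SSN are replaced with keyed pseudorandom digits and the last four stay in the clear. It is an illustration built on Python's standard `hmac` module, not HP Security Voltage's algorithm or API. The function name `tokenize_ssn` and the demo key are hypothetical, and unlike a real tokenization product this scheme is one-way.

```python
import hashlib
import hmac


def tokenize_ssn(ssn: str, key: bytes) -> str:
    """Replace the first five digits of an SSN with keyed digits; keep the last four."""
    digits = ssn.replace("-", "")
    if len(digits) != 9 or not digits.isdigit():
        raise ValueError("expected a nine-digit SSN such as 123-45-6789")
    hidden, visible = digits[:5], digits[5:]
    # Derive a deterministic five-digit token from the hidden part (toy, one-way scheme).
    mac = hmac.new(key, hidden.encode("ascii"), hashlib.sha256).digest()
    token = f"{int.from_bytes(mac[:8], 'big') % 100_000:05d}"
    return f"{token[:3]}-{token[3:]}-{visible}"


DEMO_KEY = b"demo-key-not-for-production"  # hypothetical key, for illustration only
masked = tokenize_ssn("123-45-6789", DEMO_KEY)
print(masked)
```

Because the token is derived deterministically from the key and the hidden digits, the same SSN always masks to the same value, which is what lets masked fields still be joined or counted.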

The case studies presented included a car company’s use of both live, Internet of Things feeds and recall tracking but, again, there just wasn’t enough time.

Actian

The next vendor was Actian, an analytics and business intelligence (BI) player based on Hadoop. Emma McGrattan felt rushed by the time limit and her presentation showed that. It would have been better to slow down and cover a little less. Or, well, more.

For all the verbiage it was almost all fluff. “Disruption” was in the first couple of sentences. “The best,” “the fastest,” “the most,” and similar unsubstantiated phrases flowed like water. She showed an Actian-built graph with product maturity and Hadoop strength on the two axes and, as if by magic, the only company in the upper right was Actian.

Unlike the presentations before and after hers, Ms. McGrattan’s was a pure sales pitch and did nothing to set a context. My understanding, from other places, is that Actian has a good product that people interested in Hadoop should evaluate, but seeing this presentation was too little said in too little time with too many words.

In Q&A, Emma McGrattan also made what I think is a mistake, one that I’ve heard many BI companies get away from in the last few years. An attendee asked about the biggest concern when transitioning from EDW to Hadoop. The real response should be that Hadoop doesn’t replace the EDW. Hadoop extends the information architecture, and it can even be used to put an EDW on open source, but EDWs and big data analytics typically have two different purposes. EDWs are for clean, trusted data that’s not as volatile, while big data is typically transaction oriented information that needs to be cleaned, analyzed and aggregated before it’s useful in an EDW. They are two tools in the BI toolbox. Unfortunately, Ms. McGrattan accepted the premise.

Embarcadero

Mr. Huizenga, from Embarcadero, referred to evidence that the amount of data captured in business is doubling every 1.2 years and how the number of related jobs is also exploding. However, where most big data and Hadoop vendors would then talk about their technologies manipulating and analyzing the data, he started with a bigger issue: How do you begin to understand and model the information? After all, schema-on-write still means you need to understand the information enough to create schemas.

That led to a very smooth shift to a discussion of what modeling means to Embarcadero. They’ve added native support for Hive and MongoDB, they can detect embedded objects in those schemas and they can visually translate the Hadoop information into forms that enterprise IT folks are used to seeing, can understand and can add to their overall architecture models.

Big data doesn’t exist in a void. To be successful it must be integrated fully into the enterprise information architecture. For those folks already using ERwin and those who understand the need to document modeling, they are a tool that should be investigated for the world of Hadoop.

Summary

Three good companies were crammed into a tiny time slot with differing success. The title of the seminar suggested a tie that was stronger than was there. The makings existed for three good webinars, and I wish DBTA had done that. The three firms and the host could have communicated to create an overall message that integrated the three solutions, but they didn’t.

If you didn’t see the presentation, don’t bother. Check out whichever company interests you. All three are interesting, though it might have been hard to tell from this webinar.

TDWI Best Practices Report on Hadoop: A good report for IT, not executives

The latest TDWI Best Practices Report is concerned with Hadoop. Philip Russom is the author and the article is worth a read. However, it has the usual issue I’ve seen with many TDWI reports: very strong on numbers but missing the real business point. In journalism, there’s an expression called burying the lede, hiding the most important part of a story down in the middle. Mr. Russom gets his analysis correct, but I think the priorities or the focus needs work. It’s a great report to use as a source by IT, but it’s not a report for executives.

Why am I cranky? The report starts with an Executive Summary. The problem is that it isn’t aimed at executives but is something that lets technical folks think they’re doing well. It doesn’t tell executives why they should care. What are the business benefits? What are the risks? Those things are missing.

First, let’s deal with the humorous marketing number. The report mentions the supposedly astounding figure that “Hadoop clusters in production are up 60% in two years.” That’s part of the executive summary. You have to slide down into the body to understand that only 16% of respondents said they have HDFS in production. It’s easy for early adopters to grow a small percentage to a slightly larger small percentage; it’s much tougher to get a larger slice of the pie.
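
A quick back-of-the-envelope check shows how small the base is. The sketch assumes, purely for illustration, that the 60% growth and the 16% share describe the same population of respondents; the report may measure the two differently.

```python
# Assumption: the 60% growth and the 16% share describe the same population.
current_share = 0.16  # respondents with HDFS in production today
growth = 0.60         # reported growth over two years

prior_share = current_share / (1 + growth)
gain_in_points = current_share - prior_share

print(f"prior share: {prior_share:.0%}, gain: {gain_in_points * 100:.0f} percentage points")
```

Under that assumption, the share went from about 10% of respondents to 16%, a gain of about six percentage points over two years.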

Philip Russom accurately deals with why it will take a bit for Hadoop to grow larger, but he does so past the halfway point of the article. Two things: Security and SQL.

Executives are concerned that technology helps business. Security ensures that intellectual property remains within the firm. It also ensures that litigation is minimized by not having breaches that could be outside regulatory and contractual requirements. Mr. Russom accurately discusses the security risks with Hadoop, but that begins down on page 18 and doesn’t bubble up into the executive summary.

So too is the issue of SQL. After writing about the problems in staffing Hadoop, the author gives a brief but accurate mention of the need to link Hadoop into the rest of a business’ information infrastructure. It is happening, as a sidebar comment points out with “Hadoop is progressively integrated into complex multi-platform environments.” However, that progress needs to speed up for executives to see the analytics from Hadoop data integrated into the big picture the CxO suite demands.

The report gives IT a great picture of where Hadoop is right now. As expected from a technical organization, it weighs the need, influence and future of the mystical data scientist too highly, but the generalities are there to help mid-level management understand where Hadoop is today.

However, I’ve seen multiple generations of technology come in, and Hadoop is still at an early adopter phase where too many proponents are too technical to understand what executives need. It’s important to understand risks and rewards, not a technical snapshot; and the latter is what the report is.

IT should read this report as valuable insight to what the market is doing. It’s, obviously, my personal bias, but the summary is just that, a summary. It’s not for executives. It’s something that each IT manager will use for its good resources to build their own messages to their executives.

Datameer and Altiscale: A Tale of Two Startups

A webinar I watched was titled for the announcement of Datameer Professional, but that’s not what caught my interest. I previously blogged about Datameer’s presentation to the BBBT, so you can see where I think they’re a good, basic company who seemed to have a better grasp on the market than most startups. Sadly, that wasn’t shown today. What was most interesting was the part of the presentation that covered Altiscale, a company partnering with Datameer to provide Datameer Professional.

The Basics

The presentation was a tag team between Stefan Groschupf, CEO of Datameer, and Mike Maciag, COO of Altiscale.

It began with Stefan giving a very generic overview of the market. There was little content and that which was there wasn’t a surprise. The main point is that the demand for Hadoop has moved from IT doing behind the scenes work to business users wanting quick analysis. The lack of technical knowledge makes staffing an issue.

That led directly to Mike Maciag talking about how Altiscale provides Hadoop as a service. They’re a cloud provider of Hadoop. With founders from Google, Yahoo and LinkedIn, they understand Hadoop, the cloud and the need of companies to quickly leverage external resources to get to Hadoop with better TCO than would be the case trying to build in-house.

The reason for that quick introduction became apparent as soon as Mike tossed back to Stefan. Datameer Professional is a cloud version of their product that runs on Altiscale in the US. During Q&A, it came out that they’re using a different, unnamed provider in the EU for compliance issues over there.

One feature is they’re claiming they’ll provide three sets of basic analytics with Professional, for customer analysis, operations and cyber security – in that order. However, there was the qualification from Mr. Groschupf of “over the next few months,” so no clue how much is there now or when they’ll really be available.

Case Studies

One thing I noticed showed a clear difference between the two companies in how well they understand business customers. The Datameer customers mentioned were never named. None are approved. Therefore, I have to question the veracity of claims.

The case study presented by Altiscale was a named client. Business users want to know reality and also want to confirm that a customer has enough confidence in a vendor to attach a name.

Summary

The summary combines what I heard today and what I heard at the BBBT presentation.

Datameer is a good startup, but is still focused on technology. BI has a short life cycle and companies need to rapidly prepare for the chasm. As they showed no updates in their UI, don’t have strong market messages and didn’t have a referenced customer, Datameer is a company to look at for technology, but I again wonder about the long term strategy and think the technology will be acquisition bait. Look to them for a basic interface and strong underpinnings, but they don’t seem focused clearly on end-user business messages.

Altiscale is also a good startup, but they seem better focused on a business message. Perhaps that’s because they are a cloud service company so they know it is important to do so. From the little I saw, they seem to be positioning themselves well as an alternative to Amazon and other choices to help business quickly take advantage of big data on Hadoop. I’d like to hear more.

Both Datameer and Altiscale are worth looking at, but for different reasons and they don’t have to be a set.

MapR at BBBT: Supporting Hadoop and still learning

I’ve probably used this in other columns, but that’s life. MapR’s presentation to the BBBT reminded me of Yogi Berra’s statement that it feels like déjà vu all over again. Wait, if I think I’ve done this before, am I stuck in a déjà vu loop?

The presentation was a tag team effort of Steve Wooledge, VP Product Marketing, and Tomer Shiran, VP Product Management.

The Products and Their Aim

The first part of the déjà vu was good. People love to talk about freeware, but mission critical solutions won’t be trusted to such. Even before Linux, before Unix, software came out and it took companies to package it with service and support to provide constancy and trust for widespread IT adoption. MapR is a key company doing that with Apache Hadoop, the primary open source technology for big data applications.

They’ve done the job well, putting together a strong company that, quite reasonably, has attracted some great investors and customers. Of course, because Hadoop is still in its infancy, even a leading company such as MapR only mentions 700 customers, companies paying for licenses; but that’s a statement about big data’s still fairly limited impact in operational systems, not a knock on MapR.

Their vision statement is simple: “Empowering the As-it-happens business by speeding up the data-to-action cycle.” Note the key: Hadoop is batch oriented and all the players realize that real-time analysis matters for some key sales and marketing applications. Companies are now focusing on how fast they can get information out of the databases, not what it takes to get data in. A smart move but only half the equation.

One key part of the move to package open source into something trusted was pointed out by Steve Wooledge. When the company polled customers about why they chose MapR, the largest response was availability, the up time of the system. Better performance wasn’t far behind, but it’s clear that the company understands that availability is a critical business issue and they seem to be addressing it well.

Where the déjà vu hits in a not-so-positive way is the regular refrain of technologists still not quite getting business – even when they try. This isn’t a technology problem but an innovator’s problem. When you get so wrapped up in the cool things you’re doing, you think that you need to lead with the cool things, not necessarily what the market wants.

One example was when they were describing the complexity of the MapR packaging. Almost all the focus was on the cool buzzwords of open source. Almost lost in the mix was the mention that their software supports NFS. It was developed more than 30 years ago and helps find files on networks. That MapR helps link both the latest and the still voluminous data in existing file systems is a key point, something that can help businesses understand that Hadoop can be integrated into existing systems and infrastructure. However, it’s not cool so the information is buried.

The final thing I’ll mention about the existing products is that MapR has built a nice three product suite, providing open source, mid-tier and full enterprise versions. That’s the perfect way to address the open source conundrum and move folks along the customer curve.

Apache Drill: Has it Bitten Off Too Much?

Sorry, couldn’t help the drill bit reference. Tomer Shiran took the latter part of the presentation to show off Apache’s latest data toy, Apache Drill, intended to bridge the two worlds of data. The problem I saw was one not limited to Tomer, MapR or even Apache, but common to all folks with what they think of as new technology: overhype and an addiction to revolutionary rather than evolutionary words and messages. There were far too many phrases that denigrated IT and existing technology and implied Drill would replace things that weren’t needed. When questioned, Tomer admitted that it’s a complement; but the unthinking words of many folks in the industry set out a pattern inimical to rapid adoption into the Global 1000’s critical information paths.

Backing that up was a reply given to one questioner: “CIO of one of the largest tech companies said they can’t keep doing things the same way.” Tech companies tend to be bleeding edge by nature; they do not represent the fuller business world. More importantly, a CIO saying she needs to change doesn’t mean the CIO is planning on throwing out existing tools that work. It means she wants to expand and extend in a way to leverage all technology to provide better decision making capabilities to the rest of the CxO suite.

Another area of his talk finally brought forward, through a very robust discussion, one terminology issue that many are having. Big data folks like to talk about “no schema” but that’s not really true. Even when they modify the statement to be “schema on read” it’s missing the point.

They seem to be confusing fixed layout, relational records with the theory of schemas. XML is a schema for data exchange. It’s very flexible and can be self-defined, but it’s a schema. As it came from SGML, it’s not even the first iteration of flexible schemas. The example Mr. Shiran gave was just like an XML schema. Both data source and data recipient have to know some basic information such as field names in order to make sense of data, so there’s a schema.
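
A small sketch shows why “schema on read” still implies a schema. The records and field names below are invented for illustration and have nothing to do with Drill's actual interfaces. The data is stored with no declared layout, yet the reader can only make sense of the records whose field names and types it already expects.

```python
import json

# Raw records stored with no declared schema; fields vary from record to record.
raw_lines = [
    '{"customer": "Acme", "amount": 120.5, "region": "East"}',
    '{"customer": "Globex", "amount": 75}',
    '{"cust_name": "Initech", "total": 310}',  # same meaning, different field names
]

# The reader's implicit schema: the field names and types it expects to find.
EXPECTED_FIELDS = {"customer": str, "amount": (int, float)}


def read_with_schema(line: str):
    """Return a typed (customer, amount) tuple, or None if the record does not fit."""
    record = json.loads(line)
    for field, expected_type in EXPECTED_FIELDS.items():
        if not isinstance(record.get(field), expected_type):
            return None
    return record["customer"], float(record["amount"])


parsed = [read_with_schema(line) for line in raw_lines]
print(parsed)
```

The third record carries the same business meaning but is unreadable to this consumer because the two sides never agreed on field names. That agreement is the schema, whether or not anyone wrote it down.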

Flexible schemas not only aren’t new, they don’t obviate the need for schemas. They’re just another technique for managing the wide variety of data that business wishes to turn into information. The longer big data folks misuse a term and act as if they have something revolutionary, the longer they’ll retard their needed incursion into IT and business information.

Summary

Hadoop and big data aren’t going anywhere except forward. The question is at what speed. There are some great things happening in both the Apache open source world and MapR’s licensed support for that world, but the lack of understanding of existing IT and business is retarding adoption of the new and exciting technologies.

When statements such as “But the sales guy won’t do X” are used by folks who have never been in and don’t understand sales, they’re missing the market. Today’s sales person is looking for faster and more accurate information, and is using many tools people would have said the same thing about only ten years earlier. In the meantime, sales management and the CxO suite who provide guidance for the sales force are even more interested in big picture information coming from massaging large data sources.

The folks in the new arenas such as Hadoop need to realize that they are complementary to existing technologies and that they can help both IT and business. When I pointed that out, one of the presenters asked if that meant he should do two case studies, one with Hadoop and flexible schemas and one with old line uses. I gave a clear no. It should be one with new and one that shows new and existing data sources combining to give management a more holistic picture than previously possible.

Evolution is good. MapR can help. They need to do the tough part of technology and move their view from what they think is cool to what the market thinks is needed.

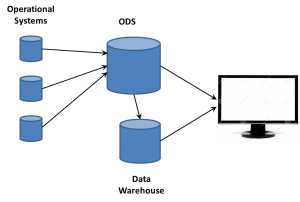

An ODS by any other name still smells like data

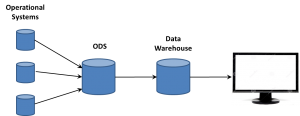

Data warehouse theory originally posited extracting data from systems, performing transformations on them and loading the resulting schemas into the data warehouse. It was a straight flow of information. However, the difference between theory and practice quickly reared its head. Today, people are talking about Data Lakes and Data Swamps. They’re not new, they’re just the ODS updated for modern data.

Data Warehouses and the ODS

Academics don’t have to deal with operational systems. In the 1980s and 1990s, those systems were growing, with ERP, CRM and other systems increasing the complexity and volume of data. Those mission critical systems, however, weren’t designed for extraction of information. They were primarily running on RDBMS systems whose locking schemes could grind process and transactional systems to a halt while an extraction program kept open large blocks of records while transforming basic data into star schemas. Something needed to be done.

There was also a secondary effect that was very important to some people. IT, just as with every other department in a large enterprise, isn’t monolithic. The people managing the operational systems knew their systems were mission critical and also knew how, in reality, those systems were big but fragile. They weren’t happy with opening their operational systems to other IT folks who were interested in non-operational things. Those folks answering other business problems? They were viewed as intruders, getting in the way of the “real work.”

For both reasons, intrusions into the operational systems were something to be kept to a minimum. IT organizations began using an Operational Data Store (ODS) to quickly open the operational systems, suck all the data out, willy-nilly (yes, I decided to use that term in a tech article…), and then go back to prime performance in an isolated system.

It was then the ODS that was the source of the data warehouse ETL process. On a tangent, this is why the people now arguing about ETL v ELT amuse me. It’s been ELETL for decades, if we want to be honest; but who cares? I’d rather have a BLT than spend so many cycles over slightly different acronyms for concepts that ETL handily describes, even in permutations.
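The ELETL flow described above can be sketched in a few lines. This is only an illustration using Python's built-in `sqlite3` module as a stand-in for real operational and analytic databases; the table names (`orders`, `ods_orders`, `dw_sales_by_region`) and the sample rows are hypothetical, not taken from any actual system.

```python
import sqlite3

# Hypothetical operational system: an orders table inside an ERP database.
operational = sqlite3.connect(":memory:")
operational.execute(
    "CREATE TABLE orders (order_id INTEGER, region TEXT, amount REAL)"
)
operational.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "east", 100.0), (2, "east", 50.0), (3, "west", 75.0)],
)

# Separate store holding both the ODS copy and the warehouse table.
analytics = sqlite3.connect(":memory:")
analytics.execute(
    "CREATE TABLE ods_orders (order_id INTEGER, region TEXT, amount REAL)"
)
analytics.execute(
    "CREATE TABLE dw_sales_by_region (region TEXT, total_amount REAL)"
)

# Steps 1 and 2 (E, L): one quick read of the operational system,
# copied as-is into the ODS with no transformation.
rows = operational.execute(
    "SELECT order_id, region, amount FROM orders"
).fetchall()
analytics.executemany("INSERT INTO ods_orders VALUES (?, ?, ?)", rows)
operational.close()  # the operational system is no longer involved

# Steps 3 to 5 (E, T, L): the slower aggregation runs only against the ODS.
analytics.execute(
    "INSERT INTO dw_sales_by_region "
    "SELECT region, SUM(amount) FROM ods_orders GROUP BY region"
)

summary = analytics.execute(
    "SELECT region, total_amount FROM dw_sales_by_region ORDER BY region"
).fetchall()
print(summary)
```

The point of the design is that the only statement touching the operational system is a plain `SELECT`; everything expensive happens afterwards in the isolated store.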

The ODS comes into its own

The IT folks who were working to provide reports for mid- and high-level managers were always trying to tweak enterprise software reports, trying to extract nuggets of value. The data warehouse was a step forward and helped build a bigger picture. However, the creation of star schemas and other DW techniques aggregated data and lost a lot of detail. A manager would see an issue and want to backtrack, to drill down into the data to know more.

The ODS became the way to do so. Very quickly, the focus changed from ODS in front of the data warehouse to both working side-by-side. Having all that raw data available gave the business analysts a way of providing much more detail and information to the business user. The first big BI companies, those such as Cognos, Business Objects and more, leveraged the two data stores to provide an ability to drill down past the aggregate information into the more detailed data.
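A drill-down of that kind might look like the following sketch, again using `sqlite3` as a stand-in. The tables, the `drill_down` helper and the sample data are all hypothetical; a real BI tool would generate similar queries behind the scenes.

```python
import sqlite3

db = sqlite3.connect(":memory:")

# Raw detail as it would sit in the ODS (hypothetical rows).
db.execute("CREATE TABLE ods_orders (order_id INTEGER, region TEXT, amount REAL)")
db.executemany(
    "INSERT INTO ods_orders VALUES (?, ?, ?)",
    [(1, "east", 100.0), (2, "east", -40.0), (3, "west", 75.0)],
)

# Aggregated view as it would sit in the data warehouse.
db.execute(
    "CREATE TABLE dw_sales_by_region AS "
    "SELECT region, SUM(amount) AS total_amount "
    "FROM ods_orders GROUP BY region"
)


def drill_down(conn, region):
    """Return the detailed ODS rows behind one aggregated warehouse figure."""
    return conn.execute(
        "SELECT order_id, amount FROM ods_orders "
        "WHERE region = ? ORDER BY order_id",
        (region,),
    ).fetchall()


# The manager sees a surprisingly low total for one region...
aggregate = db.execute(
    "SELECT total_amount FROM dw_sales_by_region WHERE region = ?", ("east",)
).fetchone()[0]

# ...and the analyst drills into the ODS to find the refund that explains it.
detail = drill_down(db, "east")
print(aggregate, detail)
```

The warehouse answers "how much", while the ODS still holds the rows needed to answer "why".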

Having that large volume of data from multiple operational systems also intrigued people who weren’t data warehouse focused. They wanted to sift the raw data for technical or performance trends, things that weren’t of interest to the typical DW designers and users, but were important to mid-level management in manufacturing, marketing and other departments. Business analysts supporting those people began to turn to more and more analysis directly on the ODS data.

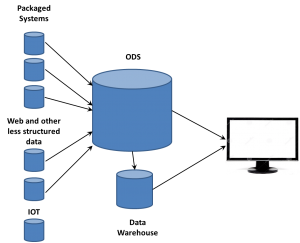

The ODS comes to the fore – by another name

That was happening in the 1990s, at the same time another key phenomenon was growing: The Web. The growth of the web meant a lot more data about a lot more things. Web sites are operational systems to marketing in just as critical a way as an assembly line is to manufacturing. People became interested in ensuring that what visitors to web sites did was captured and available for analysis. However, as the volume of web traffic grew exponentially, new issues had to be looked at to handle that data.

Columnar databases were one solution, a way to speed up analysis of dimensions of information across individual records. The vastly larger amount of data also helped push emerging MPP technologies and drove creation of Hadoop and other technologies that could manage much larger data sources much faster and more cost efficiently than could individual Unix servers.

However, the web folks were new to IT and grew up in a different generation than the folks who designed and drove data warehousing. It’s natural to want to take ownership of concepts, especially those on the edge. So the folks working with these new data sources began talking about Big Data as somehow completely different than what came before. If that was the case, they needed to think of some term for the database where they dumped all the data extracted from web sites. Data Lakes became one term. We’ve heard data swamp and other attempts to create unique terms so a company can differentiate itself from others. However, there’s already a name.

The ODS exists. It’s evolved. It’s moved forward. But it’s still the ODS.

Yes, really

“But,” you say, “an ODS is operational information and the data lake is so much more!” Well, not quite. There are two main problems with that argument.

First, times change. When the ODS was coined, the focus was on the back-end systems such as ERP, CRM, accounting and other fairly closed systems. It was before the web, before the ubiquity of mobile devices, before the wall between back-end and customer-facing systems was destroyed.

As mentioned, not just web sites but the internet itself is an operational system for your business, and not just for ecommerce companies. From lead generation to maintenance and training, the internet is a key tool for providing operational support and generating business critical operational details.

Second, just as ETL can mean a number of things, so can ODS extend past pure theory while still being relevant. CRM systems are considered operational but still contain sentiment and other information in comments fields. Just so, the vast volume of data from a call center’s voice recording system being dumped into the ODS has two components. There are basic details about the operation of the call center, things such as number of calls, call length and other details that are purely operational. There are also additional details about customers that can be distilled for strategy purposes, including the ability to provide sentiment analysis. Just because an operational system captures data that can be used for more than purely operational decision making doesn’t change the fact that the information extracted resides in an ODS.

Summary

It’s a need of information technologists from all generations to realize that things change but retain context. The ODS isn’t what it was thirty years ago, but the data lake also isn’t some new creation born full blown from the web. There are few truly revolutionary technologies. You can be a brilliant person and contribute much to technology and business and still not be a revolutionary.

The ability to manage vastly larger amounts of data than we had twenty years ago is critical. There are many innovative things being done. However, I consider the first expert systems, the first MPP algorithms and other similar technologies to be revolutionary. What is being done to allow business to gain insight by combining more and more data from ever more diverse sources is no less valuable to the industry because it is instead an evolutionary change.

The ODS has evolved. It doesn’t need a new name, just a tad more respect.

SQL v Hadoop: The Wrong Conversation

“No SQL!”

“Hadoop doesn’t require you to work in SQL!”

The claims are everywhere, but do they mean anything? To ruin the suspense: No.

There seems to be a big misunderstanding or a big lack of communication in the realm of big data. I keep hearing company after company compare Hadoop to SQL, claiming the former is somehow better than the latter. Sadly, that’s comparing apples to screwdrivers.

Hadoop is a database technology. It’s based on MPP architecture for the Cloud. Hadoop compares to flat files, relational databases and other methods for storing information in structures.

SQL is a query language. It’s similar to an API in that it’s just a way to communicate with the data source. Long ago, in the dawn of time, SQL was tightly tied to DB2 and the relational environment that spawned the syntax. However, along came the 1980s, Unix servers and PCs, and with them the need to access lots of different data sources and an unwillingness to maintain a separate query language for each one.

Along came ODBC to the rescue. It standardized core query syntax using the SQL paradigm and allowed, under the covers, the ODBC developer to use an API to translate almost standard queries into the language of each data source. It extended SQL to access new things.
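A rough modern analogue of that idea is Python's DB-API 2.0 convention, where the query code stays the same and the driver behind the connection does the translating. The sketch below is an illustration, not ODBC itself: it uses the standard-library `sqlite3` driver as a stand-in, and the `sales` table and `totals_by_customer` function are hypothetical. The assumption is that any other DB-API compliant connection, including one obtained through an ODBC bridge, could be passed to the same function.

```python
import sqlite3


def totals_by_customer(conn):
    """Run one plain SQL query against any DB-API 2.0 style connection."""
    cur = conn.cursor()
    cur.execute(
        "SELECT customer, SUM(amount) AS total "
        "FROM sales GROUP BY customer ORDER BY customer"
    )
    return cur.fetchall()


# Stand-in data source; the function above does not know or care which
# engine sits behind the connection.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("acme", 10.0), ("acme", 5.0), ("zenith", 7.5)],
)

result = totals_by_customer(conn)
print(result)
```

The query deliberately sticks to core SQL (`SELECT`, `SUM`, `GROUP BY`, `ORDER BY`), since vendor-specific extensions are exactly where the "almost standard" caveat starts to bite.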

In the meantime, as RDBMS technologies began to try to find ways around the basic limitations of relational databases, the companies added extra features such as stored procedures that extended SQL even further from the origins of basic definition and query of relational structures.

So now we have a mass of coders who have only worked with large, primarily Web oriented databases using non-RDBMS technology. No surprise, they had to code their own interfaces and queries, getting into the details of the newer systems. At the same time, they probably brushed through an overview of RDBMS and SQL in school and then never used it again.

That meant a misunderstanding of the difference between database and query. Therefore, the message of No SQL will retard their progress in integrating their solutions with the existing IT data infrastructure.

There’s a large need for people who can work with Hadoop and other younger data sources. There’s also a vast pool of people who know SQL. Yes, there will always be a need for Hadoop gurus just as there is for every technology, but the folks wanting to get information out of data sources don’t need to know the data sources, they need to get the information – and they know SQL.

A number of vendors have figured that out and are now offering SQL as a means to access Hadoop. It’s a natural fit, an extension of what the people pushing Hadoop are hoping to achieve. Hadoop and other distributed, non-row based architectures are there to expand knowledge. They’re great ways to better understand the vast body of data coming in from many new sources. However, until you can get that data to the business knowledge worker, it’s not information. SQL is the clearest way to quickly bridge that gap.

The people who realize that it’s not an either/or decision, who understand that Hadoop and SQL not only can but should work together are the people who will drive their companies forward by quickly addressing real business needs.

SQL v Hadoop is the wrong conversation. SQL and Hadoop is the right one.

Splunk at BBBT: Messages Need to Evolve Too

Our presenters last Friday at the BBBT were Brett Sheppard and Manish Jiandani from Splunk. The company was founded on understanding machine data and the presentation was full of that phrase and focus. However, machine data has a specific meaning and that’s not what Splunk does today. They speak about operational intelligence but the message needs to bubble up and take over.

Splunk has been public since 2012 and has over 1200 employees, something not many people realize. They were founded in 2004 to address the growing amount of machine data and the main goal the presenters showed is to “Make machine data accessible, usable and valuable to everyone.”

However, their presentation focused on Splunk’s ability to access IVR (Interactive Voice Response) and Twitter transcripts, and that’s not machine data. When questioned, they pointed out that they don’t do semantic analysis but focus on the timestamp and other machine generated data to understand operational flow. Still, while you might stretch and call that machine data, they did display some very simple analytics on the occurrence of keywords in text, and that’s not it.

It’s clear that Splunk has successfully moved past pure machine data into a more robust operational intelligence solution. However, being techies from the Bay Area, it seems they still have their focus on the technology and its origins. They’re now pulling information from sources other than just machines, but are primarily analyzing the context of that information. As Suzanne Hoffman (@revenuemaven), another BBBT member analyst, pointed out during the presentation, they’re focused on the metadata associated with operational data and how to use that metadata to better understand operational processes.

Their demo was typical, nothing great but all the pieces there. The visualizations are simple and clear while they claim to be accessible to BI vendors for better analytics. However, note that they have a proprietary database and provide access through ODBC and an API. Mileage may vary.

There was also a confusing message in the claim that they’re not optimized for structured data. Machine data is structured. While it often doesn’t have clear field boundaries, there’s a clear structure and simple parsing lets you know what the fields and data are in the stream. What they really mean is it’s not optimal for RDBMS data. They suggest that you integrate Splunk and relational data downstream via a BI tool. That makes sense, but again they need to clarify and expose that information in a better way.
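To show what "simple parsing" means here, the sketch below pulls named fields out of a single web server log line with a regular expression. The log layout is assumed to follow the widely used Common Log Format; the sample line, the `LOG_PATTERN` name and the `parse_line` helper are hypothetical and have nothing to do with how Splunk itself parses data.

```python
import re

# One named group per field in an assumed Common Log Format line.
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
)


def parse_line(line):
    """Return a dict of field names to values, or None if the line does not match."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None


# Hypothetical log line: no column delimiters, yet the structure is obvious.
sample = '192.0.2.10 - - [10/Oct/2014:13:55:36 -0700] "GET /cart HTTP/1.1" 200 2326'
record = parse_line(sample)
print(record)
```

There are no fixed column boundaries in the input, yet one pattern recovers every field, which is the sense in which machine data is structured.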

And then there’s the messaging nit. While talking about business is my main focus, technology presented with the incorrect words jars the educated audience. Splunk is not the first company nor will it, sadly, be the last, to have people who are confused about the difference between “premise” and “premises.” However, usually it’s only one person in a presentation. The slides and both presenters showed a corporate confusion that leads me to the premise that they’re not aware of how to properly present the difference between Cloud and on-premises solutions.

Hunk: On the Hadoop Bandwagon

Another messaging issue was the repeated mention of Hunk without an explanation. Only later in the presentation did they focus on it. Hunk is their product to put the Splunk Enterprise technology on a Hadoop database. Let me be clear, it’s not just accessing Hadoop information for analysis but moving the storage from their proprietary system to Hadoop.

This is a smart move that helps address those customers who are heavily invested in Hadoop. At least at the presentation level, they have a strong message about having the same functionality as in their core product, just residing on a different technology.

Note that this is not just helping the customer, it helps Splunk scale their own database in order to reach a wider range of customers. It’s a smart business move.

Security, Call Centers and Changing the Focus

The focus of their business message and a large group of customer slides is, no surprise, on network security and call center performance. The ability to look at the large amount of data and provide analysis of security anomalies means that Splunk is in the Gartner Magic Quadrant for SIEM (Security Information and Event Management).

In addition, IVR was mentioned earlier. That combined with other call center data allows Splunk to provide information that helps companies better understand and improve call center effectiveness. It’s a nice bridge from pure machine data to a more full featured data analysis.

That difference was shown by what I thought was the most enlightening customer slide, one about Tesco. For my primarily US readers, Tesco is a major grocery chain, with divisions focused on everything from the corner market to supermarkets. They are headquartered in England, are the major player in Europe and the second largest retailer by profit after Walmart.

As described, Tesco began using Splunk to analyze network and website performance, focused on the purely machine data concerns for performance. As they saw the benefit of the product to more areas, they expanded to customer revenue, online shopping cart data and other higher level business functions for analysis and improvement.

Summary

Splunk is a robust and growing company focused on providing operational intelligence. Unfortunately, their messaging is lagging their business. They still focus on machine data as the core message because that was their technical and business focus in the last decade. I have no doubts that they’ll keep growing, but a better clarification of their strategy, priorities and messages will help a wider market more quickly understand their benefits.