Data Lakes, the renamed ODS, aren’t the only solution for accessing data. Think actual need, understand supporting metadata, then build your data ingestion plan. Read my latest TechTarget column.

Tag Archives: teich communications

BI Buzzwords for business management: Self-service and machine learning briefly explained

I’ve seen a few company webinars recently. As I have serious problems with their marketing, but don’t wish that to imply a problem with technology, this post will discuss the issues while leaving the companies anonymous.

What matters is letting business decision makers separate the hype from what they really need to look at when investigating products. I’m in marketing and would never deny its importance, but there’s a fine line between good marketing and misrepresentation, and that line is both subjective and fuzzy.

As the title suggests, I’ll discuss the line by describing my views of two buzzwords in business intelligence (BI). The first has been used for years, and I’ve talked about it before: it’s the concept of self-service BI. The second is the fairly new and rapidly increasing use of the word “machine” in marketing.

Self-Service Still Isn’t

As I discussed in more detail in a TechTarget article, BI vendors regularly claim self-service when software isn’t. While advances in technology and user interface design are rapidly approaching full self-service for business analysts, the term is usually directed at business users. That’s just not true.

I’ve seen a couple of recent presentations that have that message strewn throughout the webinars, but the demonstrations show anything but that capability. The linking of data still requires more expertise than the typical business user has. Even worse, some vendors limit things further. The analysts still create basic reports and templates, within which business people can wander with a bit of freedom. Though self-service is claimed, I don’t consider that to approach self-service.

The result is that some companies provide a limited self-service within the specified data set, a self-service that strongly limits discovery.

As mentioned, the fact that self-service is either misunderstood or overpromised doesn’t change the fact that the technology still allows customers to gain far more insight than they could even five years ago. The key is to take the promises with a grain of salt.

When you see it, ignore the phrase “self-service.”

Prospective BI buyers need to focus on whether or not the current state of the art presents enough advantages over existing corporate methodologies to provide proper ROI. That means you should evaluate vendors based on specific improvements on your existing analytics and the products should be rigorously tested against your own needs and your team’s expertise.

Machine

Machine learning, to be discussed shortly, has exploded in usage throughout the software industry. What I recently saw, from one BI vendor, was a fun little marketing ploy to leverage that without lying. That combination is the heart of marketing and, IMO, differs from the nonsense about self-service.

Throughout the webinar, the presenter referred to the platform as “the machine.” Well, true. Babbage’s machines were analytic engines, the precursors to our computers, so complex software can reasonably be viewed as a machine. The usage brings to mind the concept of machine learning without actually claiming it.

That’s the difference: self-service states something the products aren’t, while machine might vaguely bring to mind machine learning but does not directly imply that. I am both amused and impressed by that usage. Bravo!

Machine Learning and Natural Language Processing

This phrase needs a larger article, one I’m working on, but I would be remiss to not mention it here. The two previous sections do imply how machine learning could solve the self-service problem.

First, what’s machine learning? No, it’s not complex analytics. Expert systems (ES) are a segment of artificial intelligence focused on machines which can learn new things. Current analytics can use very complex algorithms, but they just drive user insight rather than provide their own.

Machine learning is the ability for the program to learn new things and to even add code that changes algorithms and data as it learns. A question to an expert system has one answer the first time, and a different answer as it learns from the mistakes in the first response.

Natural Language Processing (NLP) is more obvious. It’s the evolving understanding of how we speak, type and communicate using language. The advances have meant an improved ability for software to respond to people without their clicking on lots of parameters to set up a search. The goal is to allow people to type or speak queries and for the ES to then respond with information at the business level.

The hope I have is that the blend will allow IT to set up systems that can learn the data structures in a company and basic queries that might be asked. That will then allow business users to ask questions in a non-technical manner and receive information in return.

Today, business analysts have to directly set up dashboards, templates and other tools that are directly used by business, often requiring too much technical knowledge. When a business person has a new idea, it has to go back to a slow cycle where the analyst has to hook in more data, add new templates and more.

When the business analyst can focus on teaching the ES where data is, what data is and the basics of business analysis, the ES can focus on providing a more adaptable and non-technical interface to the business community.

Machine learning, i.e. expert systems, and NLP are what will lead to truly self-service business applications. They’re not here yet, but they are on the horizon.

IoT and Business Intelligence

My latest article on Tech Target is about how Business Intelligence and the Internet of Things (IoT) overlap.

DBTA Webinar: Too many cooks, yet again

Sadly, DBTA is becoming known for taking interesting companies, putting them in a blender and having each lose their message. A recent webinar that included Cask, Attunity and HPE Security, all in a one-hour time slot, again shows the problem. It was a mess.

Cask is a young Hadoop company with an interesting opportunity (Disclosure: As I’m discussing marketing, I need to mention I recently interviewed for a position at Cask). The company is working to put wrappers around Hadoop code to make it easier for IT to use the data platform. One of their products is Cask Hydrator, to help populate the database. That begins to move the message of Hadoop out of the early adopter phase and into a business message, but the presentation was still far too technical.

Attunity then presented and a key point was that they make data ingest easy. If that sounds like a similar message to Cask’s, you’re right. Why the two were together on the webinar when much of what they said sounded like competition wasn’t clear. On the good side, Attunity did a far better job at presenting a business message, both in how the presenter talked about the products and in which case studies were used.

HPE Security made another appearance, tacked onto the end of a presentation. Data security is critical, and HP has put together a very good message on it, but it didn’t vaguely fit the tone and arena of the previous presenters.

When Companies Should Share a Stage

The smaller companies seem to have a problem. It’s simple: Their involvement in webinars might be driven by marketing, but it’s being controlled by bean counters. Each of the three companies had something good to say, and each should have taken the time to say it in a stand-alone webinar. However, sharing costs was made to be the primary issue and so the mess ensued.

When should firms share the spotlight? That should happen when the item missing from the top of my presentation is there. The missing piece is having a joint story to tell. None of the case studies mentioned the companies working in partnership. None. When multiple vendors work to provide a complete solution to a client, even if the vendors might sometimes compete, there’s a strong case for multiple companies in a webinar.

This webinar was not that. It was companies not feeling strongly enough about themselves for the other executives to overrule the COOs or CFOs and push a solid webinar about themselves.

All of these companies are worth looking at within the big data arena, just not in such a forced-together setting. Stand on your own or show a joint project.

What Makes Business Intelligence “Enterprise”?

I have an article in the Spring TDWI Journal. It has now been six months and the organization has been kind enough to provide me with a copy of my article to use on my site: TDWI_BIJV21N1_Teich.

If you like my article, and I know you will, check out the full journal.

Storytelling as evolution, not revolution

My latest Tech Target column is on storytelling.

Webinar Review: Oracle Big Data Cloud, Understanding Business

People at technology startups love to call the industry giants dinosaurs. The analogy fails for a number of reasons. The funniest is that the dinosaurs existed for many millions of years. As the large companies exist now, are the startups saying the big companies will only disappear if we’re hit by a meteor? Companies became large by filling a need. While many might not be as nimble, their experience, especially in enterprise software, means they often see the needs of the business community while the small companies are focused too much on their “cool” technology.

This week’s Oracle webinar, hosted by the DBTA, was a good example of that. The speakers were Rich Clayton, VP Business Analytics Product Group, and Omri Traub, VP Software Development, and the subject was, no surprise, Oracle Big Data Cloud Service (OBDC. Yeah, I know. Too close to ODBC…). Before we get into the details, people need to be aware that Oracle is fully committed to the cloud, as pointed out in a recent advertorial in Forbes. Oracle is clearly competing with Amazon for enterprise cloud business. Big data is only one part of that.

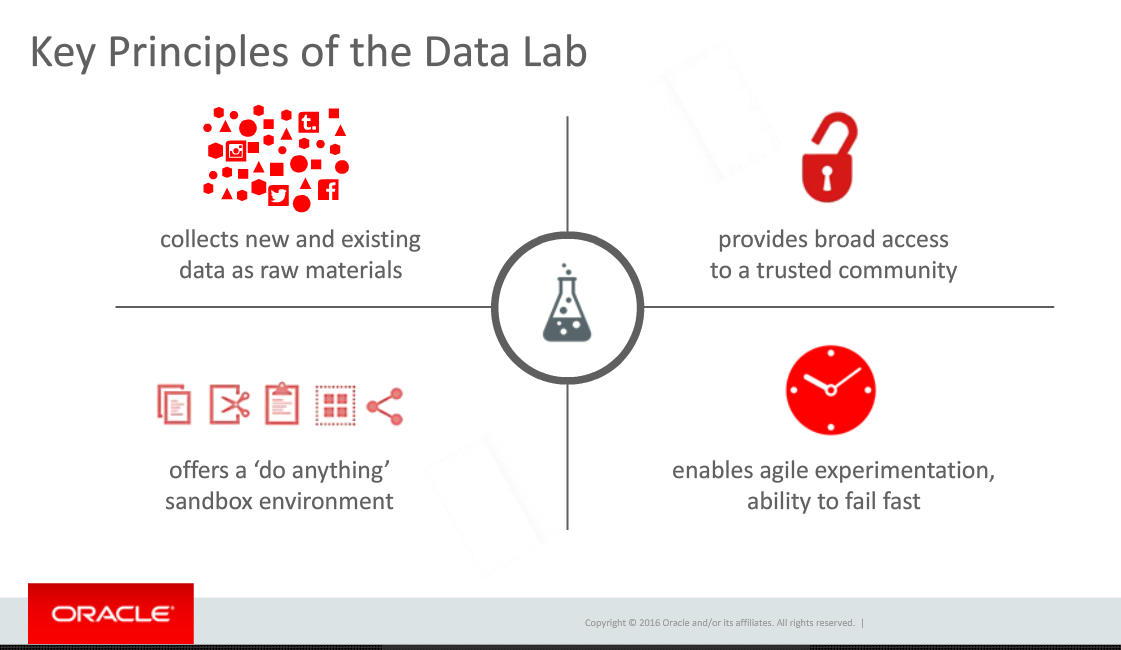

Rich Clayton began the presentation by pointing towards Thomas Edison’s laboratory as an example of using the ideas from many people to not only invent things but also to figure out how to market those inventions. He brought that directly into the evolution of corporate data labs. The biggest problem, Rich stated, is that labs are usually only populated by very technical people while they require a broader array of talents. That requirement is one of the data lab principles he defined and one I’ve also described as the missing component of many corporate data labs.

A related problem is that most products are so complex and siloed that very technical people are needed. At this stage in business intelligence and big data, that’s the horse that needs to be addressed before the broad access cart can move.

Omri Traub then took over for the demonstration portion of the presentation. Unfortunately, he unintentionally proved the point about technical folks missing business needs by the setup he used for the demonstration. The demo was built around an enormous amount of New York City taxi data. While manipulating a billion-record data set is cool and powerful, he never presented a business message. He pointed to the large volume of data, talked about other data sources he combined, and then played with the data to show correlations.

The problem? Omri claimed we were gaining insight. Correlations aren’t insight. Insight is understanding how those correlations might impact your business and developing ideas for how to adapt the business to what you find. Nothing in the demonstration pointed towards insight.

Fortunately, Rich Clayton had earlier given a couple of case studies showing business insight gained by early OBDC customers. It would have been much better if Mr. Traub had focused on one of those cases or something similar.

The best point of the demonstration was when Omri showed how, in the middle of playing with some relationships, he easily incorporated some analysis created by a different person. As mentioned above, collaboration is critical and it looks like Oracle hasn’t limited that to just a marketing message but has worked to make sure that Oracle’s product helps the team. As many companies claim to do that and it was only an overview, your mileage might vary. Make sure when you talk to them to follow through and see whether the collaboration (not to mention the entire product…) meets your needs.

The final section was the Q&A. I’m a marketing person, so I have to be honest and state that it sounded like canned questions they wanted to address, as there was way too much about the full Oracle ecosystem brought into discussion at this point compared to what I’d expect from customers. Still, there was one important point.

A question was asked about what advanced analytics might be added. Mr. Traub had the perfect response. After quickly mentioning that, yes, Oracle was always looking at advanced analytics and how to add them, he made a much more important point. Collaboration is key and OBDC is designed to get business people involved. All analytics need to be added in a usable manner, in a way that is understandable and can be leveraged by more people than just the technical resources.

That is the critical viewpoint that a large, enterprise-focused company can bring to BI, the cloud and big data. That’s why it’s foolish to write off the large companies, the ones with expertise in not just technology, but in business and business relationships. They might not move as fast, but they can move to the right places with the right products and the right business messages.

DBTA Webinar Review: Leveraging Big Data with Hadoop, NoSQL and RDBMS

A presentation last week, hosted by Database Trends and Applications (DBTA), was a great example of some interesting technical information presented poorly. As that sentence implies, this column is one about the marketing of business intelligence (BI), not about the technology – well, not much…

There were three presenters: Brian Bulkowski, CTO and Co-founder, Aerospike; Kevin Petrie, Senior Director and Technology Evangelist, Attunity; Reiner Kappenberger, Global Product Management, HPE Security – Data Security.

Aerospike

Brian was first at the podium. Aerospike is a company providing what they claim is a very high speed, scalable database, proudly advertising “NoSQL!” The problem they have is that they are one of many companies still confused about the difference between databases and SQL. A database is not the access method. What they’re really focused on is loosely structured data, the same target that Hadoop and other newer databases are aimed at. That doesn’t obviate the need to communicate via SQL.

He also said that the operational in-memory market is “owned by NoSQL.” However, there were no numbers. Standard RDBMS’s, columnar and NoSQL databases all are providing in-memory storage and processing. In fact, Information Management has a slide show of Gartner’s database analytics vendor report and you can see the breadth there. In addition, what I constantly hear (not statistically significant either…) is that Hadoop and other loosely-structured databases are still primarily for batch. However, the slide show I just mentioned is in alphabetical order, so Aerospike is the first one you’ll see. Note again that I’m pointing out flaws in the marketing message, not the products. They could have a great in-memory solution, but that doesn’t mean NoSQL is the only in-memory option.

The final key marketing issue is that he kept misusing “transactional.” He continued to talk about RDBMS’s as transactional systems even while he talked about the power of Aerospike for better handling the transactions. In the later portion of his presentation, he was trying to say that RDBMS’s still had a place, but he was using the wrong term.

Attunity

Attunity’s Kevin Petrie was second and his focus was on Attunity Replicate. The team of Aerospike and Attunity again shows the market isn’t yet mature enough to have ETL and databases come smoothly together. Kevin talked about their 35 sources and it seems that they are the front end in the marketing pairing of the two companies. If you really need heterogeneous data sources and large database manipulation, you’ll need to look at the pair of companies.

My key issue with this section was one of enterprise priorities. Perhaps the one big, anonymous reference they both discussed drove the webinar, but it shouldn’t have owned the message. Mr. Petrie spent almost all his time talking about Hadoop, MongoDB and Kafka. Those are still bleeding edge tools while enterprise adoption requires a focus on integrating with standard and existing sources. Only at the end, his third anonymous case, did Kevin have a slide that mentioned RDBMS sources. If he wants to keep talking with people running experimental and leading edge tests of systems, that priority makes sense. If he wishes to talk to the larger enterprise market, he needs to turn things around.

The other issue was a slide that equated RDBMS, Data Warehouse and Hadoop as being on equal footing. There he shows a lack of business knowledge. The EDW, as an old TV show would declare, is the one of these things that is not like the others. It has a very different purpose from the two database technologies and isn’t technology dependent.

HPE Security

Reiner Kappenberger gave a great presentation but it didn’t belong. It seems the smaller two firms were happy to get HP to help with the financing but they didn’t think about staying on message.

Let me make it very clear: Security is of critical importance. What Mr. Kappenberger had to say was very important for people to hear. However, it didn’t belong in this webinar. The topic didn’t fit and working to stuff three presenters into forty minutes is always tough. Another presentation where all three talked about how they work to ensure that the large volumes of data can be secure at multiple levels would have been great to hear – and I hope the three choose to create such a webinar.

Summary

This was two different webinars stuffed into one, blurring the message. In addition, Aerospike and Attunity either need to make clear that they’re not yet trying to address the mass market or they need to learn how to stop speaking to each other and other leading edge people and begin to better address the wider enterprise market.

The unnamed reference seemed to be a company that needed help with credit card transactions and fraud detection, and all three companies worked to provide a full solution. However, from a marketing standpoint I don’t think they did proper service to their project by this webinar.

SQL v NoSQL: It’s not really a fight

My latest Tech Target article is up at http://searchdatamanagement.techtarget.com/tip/SQL-vs-NoSQL-database-design-debate-isnt-even-a-real-fight.

Technology and Disruption

Late last year, I read an article in the Harvard Business Review on disruptive innovation. I thought it needed correction and decided to post my response on LinkedIn.