Last Friday’s BBBT presentation by an ensemble cast from Rocket Software was interesting, in both the good and bad senses of that word. They have some very interesting products that address the business intelligence (BI) industry, but they also showed some confusion about how to bring those products to market.

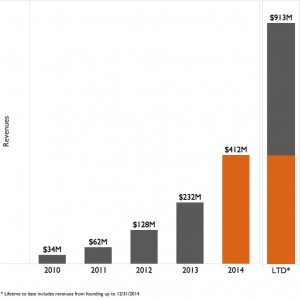

Bob Potter, SVP and GM, Business Intelligence, opened the presentation by pointing out that Rocket has more than $300 million (USD) in annual revenue, yet many tech folks have never heard of them. One reason for that combination is that they’ve done a good job of balancing build and buy decisions to provide niche software solutions in a variety of places and on a number of platforms. Another is a strong mainframe focus. The third is that they don’t seem to know how to market. Let’s focus on just the two products presented to demonstrate all of these.

Rocket Data Virtualization

Most of the presentation was focused on Rocket Data Virtualization (DV). There are two issues it addressed. The first is accessing data from multiple sources without the need to first build a data warehouse. DV is the foundation of what was first thought of as the federated or virtual data warehouse. It’s useful. Gregg Willhoit, Managing Director, Research & Development, gave a good overview of DV and then delved into the product.

Rocket Data Virtualization is a mainframe-resident product, running on IBM z. While this has the clear market limit of requiring a company large enough to have a mainframe, that market is still worth serious consideration. Vast numbers of applications still run on mainframes, and it’s not just old-line COBOL. Mainframes run Unix, Linux and other OS partitions to support multiple applications.

An important point was brought up when Gregg was asked about access to the product. He said that Rocket is working with other BI industry partners, folks who provide visualization, so that they can access the virtualized data.

However, if you want to know more about the product, good luck. As I’ll discuss in more detail later, if you go to their site you’ll find nothing but marcom fluff. It’s good marcom fluff, but diving deeper requires downloads or contacting sales people. That doesn’t help a complex enterprise sale.

Rocket Discover

The presentation was turned over to Doug Anderson, Solutions Engineer, for a look at their unreleased product Rocket Discover. It’s close, in beta, but it’s not yet out.

As the name implies, Rocket Discover is their version of a visualization tool. It’s a very good, basic tool that will compete well in the market except for two key things. The first is that they claimed Rocket is aiming at “high level executives” and that’s not the market. This is a product for business analysts. Second, while it has the full set of features that modern analysts will want, it’s based on a look and feel that’s at least a decade old.

On the very positive side, they do have a messaging feature built in to help with collaboration. It needs to grow, but this is a brand new product and they have seen where the market is going and are addressing it.

Another positive sign is this isn’t a mainframe product. It runs on servers (unspecified) and they’re starting with both on-premises and cloud options. This is a product that clearly is aimed at a wider market than they historically have addressed.

While they have understood the basics of the technology, the question is whether or not they understand the market. One teaser that shows that they probably don’t was brought up by another analyst, who pointed out that Doug and others were often referring to the product as just Discover. Oracle has had a similarly named product, Discoverer, for many years. While Rocket might not have seen it on the mainframe, there will be some marketing issues if the company doesn’t always refer to the product as Rocket Discover, and they might have problems anyway. Their legal and marketing teams need to investigate quickly – before release.

Enterprise IT v Enterprise Software: Understanding the Difference

The product presentation, and a Q&A session that covered more issues with even more folks from Rocket taking part, show the problems Rocket will have. As pointed out, the main reason that so many people have never heard of Rocket is that it sells very technical solutions to enterprise IT. Those are direct sales to a very technical audience. However, enterprise software is more than enterprise IT.

Enterprise software such as ERP, CRM, SFA and, yes, BI, addresses business issues with technology. That means there will be a complex sales cycle involving people from different organizations, a cycle that’s longer and more involved than a pure sale to IT. I’m not sure that Rocket has yet internalized that knowledge. As mentioned above, their website is very fluffy, as if the thinking is that the way to sell is to put up something pretty with mission and message only, then quickly get your techies talking directly to their techies. (I argue against the current fad of multiple bands requiring scrolling; it’s neither pretty nor easy to use.) That may work when talking with techies only, but not in an enterprise sale.

That’s my biggest gripe about the software industry not understanding the need for product marketing. You must be able to build a bridge to both technical and business users with a mix of collateral and content that spans the gap. I’m not seeing that with Rocket.

In addition, consider the two products and the market. DV is very useful and there are multiple companies trying to provide the capability. While Rocket’s knowledge of and access to mainframe data is a clear advantage, the fact that the product only runs on mainframes is a very limiting competitive message. I understand they have hitched their wagon very closely to IBM, and it makes sense to have a z option, but failing to provide multiple platforms, or a way for non-mainframe customers to use their more general concepts and technologies, will retard growth.

If their plan is to provide what they know first then spread to other platforms, it’s a good strategy; but that wasn’t discussed.

Both products, though, have the same marketing issue. Rocket needs to show that it understands it is changing from selling almost exclusively to enterprise IT and needs to create a more integrated product marketing message to help sell to the enterprise.

There’s also the issue of how to balance the messages for the two products. For Rocket Data Virtualization to succeed, it really does need to work with the key BI vendors. Those companies will wonder about Rocket’s dedication to them while Rocket Discover exists. Providing a close relationship with those vendors will retard Rocket Discover’s growth. Pushing both products will be walking a tightrope and I haven’t seen any messaging that shows they know it.

Summary

Rocket is a company that is very strong on technology that helps enterprise IT. Both Rocket Data Virtualization and Rocket Discover have the basics in place for strong products. The piece missing is an understanding of how to message the wider enterprise market and even the mid- and small-size company markets.

Rocket Data Virtualization is the product with the most immediate impact, clearly differentiated by very powerful access to mainframe data, and it is the one I think should make the more rapid entry into its space. The question is whether or not they can spread platform support past the mainframe faster than other companies will realize the importance of mainframe data. In the short term, however, they have a great message if they can figure out how to push it.

Rocket Discover is a very good start for a visualization tool, but primarily on the technology side. They need to figure out how to jump forward in GUI and into predictive and other analytics to be truly successful going forward, but the market is young and they have time.

The biggest issue is whether Rocket will learn how to market and sell in broader enterprise and SMB sales, both to better address the multiple buyers in the sales cycle and to better communicate how both products interact in a complex marketplace.

Rocket is worth the look; they just need to learn how to provide the look to the full market.