The latest TDWI webinar was on embedded analytics. The speakers were Fern Halper, the director of TDWI research for advanced analytics, and Mark Gamble from OpenText. For those of you who haven’t heard, Actuate was acquired by OpenText and is being rebranded but, according to Mark, will remain an independent division for now.

Ms. Halper’s main point is that “embedded” has a lot of different meanings for different audiences and that she wants to create a clear framework for understanding the terminology within the analytics space. She’s clear that what’s meant isn’t just the mass-market idea of wearable software, but that analytics can be embedded in specific applications, broader systems and, yes, devices such as mobile and wearable items.

Early in the presentation she showed a two-axis image comparing structured and unstructured data combined with human- and machine-generated data. While I think the coloring should rotate, to emphasize that the difference between machine- and human-generated information is a bigger issue than structured versus unstructured, it’s a nice way of understanding some of the data streams.

That, however, was a definitional slide and discussion. The real meat of Fern Halper’s presentation was the framework she described to help understand the steps of embedding analytics.

Operationalized analytics are those that are involved in the full process of decision making. For instance, a call center employee might be talking to a prospect whose finances are flagged as a question mark. That prospect must be sent to another person to process the decision based on analytics.

Integrated analytics are those that allow the call center operator to see the analysis and immediately make decisions based upon guidelines.

Automated analytics are those that provide the operator with a decision tree response based on analytics done behind the scenes.

The only issue I take with the framework is that it doesn’t necessarily mean true real time. The example discussed shows that the integrated approach can be real time for what humans think of as real time within our own interactions. Meanwhile, real time might not be a necessary component of some automated decisions. Real time is a separate issue and I think Fern’s framework would be better served by eliminating that item.

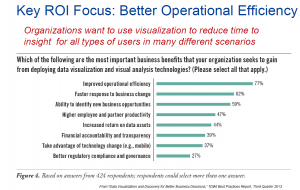

Fern Halper followed the framework with the usual and interesting TDWI survey numbers. This time, the questions were focused on the adoption of analytics tied to the framework. The numbers showed the unsurprising fact that analytics adoption is still in its infancy. One of the great things about TDWI’s numbers is that they show the reality, which contradicts the industry’s hype.

One set of numbers I’d like to see wasn’t included. The responses shown were only general IT responses about who has started using which analytics. I would have loved to see one slide that clearly showed only the sub-segment of companies who are already using analytics tools and where those companies are within Ms. Halper’s framework. How are the bleeding-edge folks doing at moving through the framework to automated solutions?

OpenText

The rest of the program was a fast presentation by Mark Gamble, pointing to OpenText’s (Actuate’s) main benefit claim of enterprise scalability, along with other factors. One of the phrases I liked was his statement that they “adhered to a low code methodology.” It’s nice to hear folks admitting that as much as we want to eliminate coding, some of that is still required. Honesty isn’t a negative in marketing and I liked that turn of phrase.

In the other direction, he mentioned there were over fourteen million downloads of BIRT and that the company “believes” they have over three million users. I’m not interested in belief but they don’t seem to have a clear figure on adoption.

The main problem I had was the demo. Mark showed experimental work purporting to show live acquisition of basic automotive information, such as speed and RPM, displayed on a computer, phone and watch. It was not only not a business case but one that seemed to go back to the misunderstanding about the meaning of embedded which was addressed by Fern. Yes, it was embedded on two devices, but the demo didn’t show how it might be embedded in business applications. It stuck with the flashy concept of wearables.

OpenText might have something good with their analytics portability, but I don’t think the demo presents it to a business audience. Yes, techies will understand the underpinnings that make it cool, but the business folks writing checks need to see something that justifies the expenditure and I don’t think that’s shown.

Summary

Fern Halper did another good job of putting the adoption of analytics into perspective, this time with a framework for better understanding embedded analytics.

Mark Gamble did a passable job of presenting OpenText’s solution but I feel he must do a better job of figuring out a business message.

TDWI’s data shows the early state of adoption that exists in the market. Fern Halper’s framework will help companies better understand how to move into the arena, but only if the companies providing those solutions can better present how they’ll help solve business issues.