Last Friday’s visitors to the BBBT were from Teradata Aster. As you may have noticed, I tend to focus on the business aspects of BI. Because of that, this blog entry will be a bit shorter than usual.

That’s because the Teradata Aster folks strongly reminded me of my old days, before I moved to the dark side: they were very technical. The presenters were Chris Twogood, VP, Product and Services Marketing, and Dan Graham, Technical Marketing.

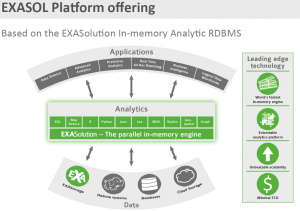

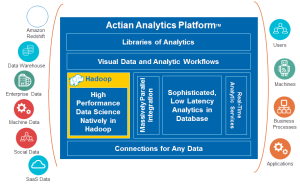

Chris began with a short presentation about Aster. The closest it came to marketing was pointing out the real problem caused by the proliferation of analytic tools and noting that, as with all platform products, Aster is an attempt to better integrate a heterogeneous marketplace.

As others who have presented to the BBBT have done, Chris Twogood also pointed out that R and other open source solutions are no more sufficient on their own for a full BI solution managing big data and analytics than pure RDBMS solutions are, so a platform has to work with both the old and the new.

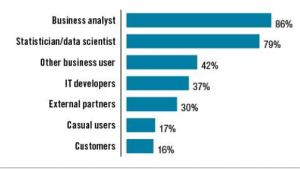

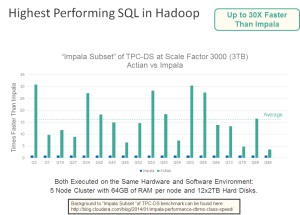

The presentation was then handed over to Dan Graham, that rare combination of a very technical person who can speak clearly to a mixed-level audience. His first point continued Chris’, addressing the need to integrate SQL and MapReduce technologies. In support of that, he showed a SQL statement he said could be managed by business analysts, not the magical data scientist. Business analysts will need some training, but that’s always the case in a fast-moving industry such as ours.

Most of the rest of the presentation was about his love of graphing. BI is focused on providing more visual reporting of highly complex information, so it wasn’t anything new. Still, what he showed of Teradata’s focus is good, and his enthusiasm made it an enjoyable presentation even if it was more technical than I prefer. It also didn’t hurt that the examples were primarily focused on marketing issues.

The one point on which I take issue is the wall he tried to build between graph databases and the graph routines Aster is leveraging. He claimed Aster isn’t really competing with graph databases because, Dan posited, the two are somehow different.

I pointed out that whether graphs are created in a database, in routines layered on top of SQL or Java, or in a BI vendor’s client tools matters only from a performance standpoint; they all provide graphical representations to the business customer. That means they all compete in the same market. Technical distinctions don’t create separate business markets, except insofar as they affect the cost and performance the organization experiences. I didn’t get a clear response showing they were thinking at a higher level than technological differences.

Summary

Teradata has a long and storied history with large data. They are a respected company. The question is whether they will adapt to the new environment companies face, with its explosion of primarily unstructured data, and develop a stronger marketing focus. Will they be able to either compete or partner with newer companies in the space?

Teradata is a company that has long focused on large-data, high-performance database solutions. They clearly seem to be on the right path with their technology, and the implication is that they are on it with their strategic and marketing focus as well. They built their name on large databases for the few companies that really needed their solutions. Technology came first, and marketing was almost entirely technical, aimed at the people who understood the issue.

The proliferation of customer service and Web data means that the BI market is addressing a much wider audience for solutions managing large amounts of data. I trust that Teradata will build good technology, but will they realize that marketing has to become more prominent to address a much larger and less technical audience? Only time will tell.