In memory! Big Data! Data Analytics! The Death of BI!!!!

We in technology love the new idea, the new thing. Often that means we oversell the new. People love to talk about technology revolutions, but those are few and far between. What usually happens is evolution, a new take on an existing idea which can add new value to the same concept. The business intelligence space is no more immune to the revolution addiction than is any other area, but is it real?

Let’s begin to answer that by starting with the basic question: What is BI? All the varied definitions come down to the simple issue that BI is an attempt to better understand business through analyzing data. That’s not a precise definition but a general one. IT was doing business analysis before it was called that, either within individual systems or ad hoc through home-grown software. Business intelligence became a term when MicroStrategy, Cognos, Business Objects and others began to create applications that could combine information across systems and provide a more consistent and consolidated view of that data.

Now we have a new generation of companies bringing more advanced technologies to the fore to improve data access, analysis and presentation. Let’s take a look at a few of the new breed and understand if they’re evolution or revolution.

Some Examples

In-Memory Analysis

As one example, let’s discuss in-memory data analysis as headlined by QlikTech, Tableau and a few others. By loading all data to be analyzed into memory, performance is much faster. The lack of caching and other disk access means blazingly fast analytics. What does this mean?

The basic response is that more data can be massaged without appreciable performance degradation. We used to think nothing of getting weekly reports; now a five-second delay from clicking a button to seeing a pie chart is considered excessive. However, is this a revolution? Chips are far faster than they were five years ago, not to mention twenty. However, while technologies have been layered to scale more junctions, transistors, etc., into smaller spaces, it’s the same theory and concept. The same is true of faster display of larger data volumes. It’s evolutionary.

Another advantage of the much faster performance is that you can do calculations that are much more complex. Going from simple pivot tables to scatter charts with thousands of points is a change in complexity. However, it’s just another way of providing business information to business people in order to make decisions. It’s hard to find a way to describe that as revolutionary, yet some do.

Big Data

Has there been a generation of business computing that hasn’t thought there was big data? There’s a data corollary to Parkinson’s law which says that data expands to fill available disk space. The current view of big data is just the latest techniques that allow people to massage the latest volumes of data being created in our interconnected world.

Predictive Analytics

Another technical view of business is that, because software has historically been limited to describing what’s happened, business leaders weren’t using it for predictive analysis. Management has always been the combination of understanding how the business has performed and then predicting what would be needed for future market scenarios in order to plan appropriately. Yes, there are new technologies that are advancing the ability of software to provide more of the analytics to help with prediction, but it’s a sliding of the bar, allowing software to do a bit more of what business managers had been doing through other means.

But there’s something new!

Those new technologies and techniques are all advancing the ability for business people to do their jobs faster and more accurately, but we can see how they are extensions of what’s happening. Nobody is saying “Well, I think I’ll dump my old dashboards and metrics and just use predictive analysis!” The successful new vendors are still providing everything described in “legacy BI systems.” It’s extension, not replacement – and that’s further proof of evolution in action.

Visio (ask your parents…) no longer exists, while Crystal Reports (again…), Cognos, Business Objects and others have been absorbed by larger companies to be used as a must-have in any business application. In the same way, none of the current generation of software companies is saying that the latest analytics techniques are sufficient. They all support and recreate the existing body of BI tools into their own dashboards and interfaces. The foundation of what BI means keeps expanding.

“Revolutionary”?

It’s really very simple: technology companies tend to be driven by technologists. Stunning, I know. Creating in-memory analytics was a great piece of technical work. It hadn’t been successfully done until this generation of applications. We knew we were getting more and more data accumulated from the internet and other sources, then some brilliant people thought about some algorithms and supporting technologies that let people analyze the data in much faster ways. People within the field understood that these technical breakthroughs were very innovative.

On the other side, there’s marketing. Everyone’s looking for the differentiator, the thing that makes your company stand out as different. Most marketing people don’t have the background to understand technology, so when they hear that their firm is the first, or one of the first, to think of a new way of doing things, they can’t really put it into context. New becomes unique becomes revolutionary. However, that’s dangerous.

Well, not always.

To understand that, we must refer to one of the giants in the field, Geoffrey Moore. In “Crossing the Chasm” and “Inside the Tornado,” Moore formally described something that many had felt, that there’s a major difference between early adopters and the mass market. There’s a chasm that a company must cross in order to change from addressing the needs of the former to the needs of the latter.

The bleeding edge folks want to be revolutionary, to believe that they’re visionaries. Telling them that they need to spend a lot of money and a lot of sleepless nights on buggy new software because it’s a step in the right direction doesn’t work quite as well as telling them it’s a revolutionary approach. As the founders like to think of themselves in the same place, it’s a natural fit. We’ve all joined the revolution!

There’s nothing wrong with that – if you are prepared for what it entails. There are people who are willing to get the latest software. Just make sure you understand that complexity and buggy young code don’t mean a revolutionary solution, just a new product.

Avoiding Revolution

Then there’s the mass market. To simplify Moore’s texts, business customers want to know what their neighbors are doing. IT management wants to know that their always understaffed, underfunded and overworked department isn’t going to be crushed under an unreasonable burden. Both sides want to know their investment brings a proper ROI in an appropriate time frame. They want results with as little pain as possible.

We’ve seen that the advances are in BI, not past BI. Yet so many analysts are crying the death of something that isn’t dying. How does that help the market or younger companies? It points people in the wrong way and slows adoption.

Not all companies have fallen for that. Take one of the current companies making the most headway with new technologies, Tableau Software. They clearly state, on their site, “Tableau is business intelligence software that allows anyone to connect to data in a few clicks, then visualize and create interactive, sharable dashboards with a few more.” They have not shied away from the label. They’re not trying to replace the label. They’re extending the footprint of BI to do more things in better ways. Or take QlikTech’s self-description: “QlikTech is the company behind QlikView, the leading Business Discovery platform that delivers user-driven business intelligence (BI).”

There are many companies out there who understand that businesses want to move forward as smoothly as possible and that evolution is a good thing. They’ll talk to you about how you can move forward to better understand your own business. They’ll show you how your IT staff can help your business customers while not becoming overwhelmed.

Know if you’re willing to be an early adopter or not, then question the vendors accordingly. As most of you are, purely by definition, in the mass market, move forward with the comfort that BI is not going anywhere and plenty of companies are merging the old and new in ways that will help you.

To paraphrase Mark Twain, the reports of BI’s death have been greatly exaggerated.

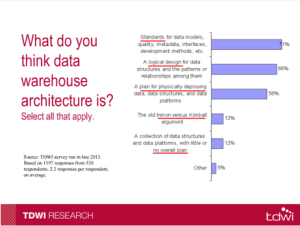

Another key point was made as to the evolving nature of the definition of data warehousing. Twenty years ago, it was about creating the repository for combining and accessing the data. That is now definition number three. The top two responses show a higher-level business process and strategy in place than “just get it!”

Where I have a problem with the presentation is when Mr. Russom stated that analytics are different from reporting. That’s a technical view and not a business one. His talk contained the reality that first we had to get the data, now we can move on to more in-depth analysis, but he still thinks they’re very different. It’s as if there’s a wall between basic “what’s the data” and “finding out new things,” concepts he said don’t overlap.

Let’s look at the current state of BI. A “report” might start with a standard layout of sales by territory. However, the Sales EVP might wish to wander the data, drilling down and slicing & dicing to understand things better by industry in territory, cities within and other metrics across territories. That combines what he defines as separate reporting and data discovery.
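For the more technically minded, the overlap between a "report" and "discovery" can be shown in a few lines. This is only a minimal sketch, not any vendor's method: the sales records, the field names and the `summarize` helper are all invented here for illustration. The point is that the territory report and the drill-down are the same aggregation, just run with a different filter and a different dimension.

```python
from collections import defaultdict

# Hypothetical sales records, invented purely for illustration.
SALES = [
    {"territory": "West", "industry": "Retail", "city": "Seattle", "amount": 120},
    {"territory": "West", "industry": "Retail", "city": "Portland", "amount": 80},
    {"territory": "West", "industry": "Finance", "city": "Seattle", "amount": 200},
    {"territory": "East", "industry": "Retail", "city": "Boston", "amount": 150},
    {"territory": "East", "industry": "Finance", "city": "Boston", "amount": 50},
]


def summarize(records, *dimensions):
    """Total the amount for each distinct combination of the given dimensions."""
    totals = defaultdict(int)
    for row in records:
        key = tuple(row[d] for d in dimensions)
        totals[key] += row["amount"]
    return dict(totals)


# The standard "report": sales by territory.
report = summarize(SALES, "territory")

# The "discovery" step: filter to one territory (slice), then regroup
# by another dimension (drill down). Same helper, same data.
west_only = [r for r in SALES if r["territory"] == "West"]
drill_down = summarize(west_only, "industry")

print(report)
print(drill_down)
```

Nothing in the second query is a different kind of work from the first. The EVP wandering the data is simply asking the same question at a finer grain.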
